Monday, August 29, 2011

Check Checkbox using Descriptive programming

Option explicit

Dim qtp,flight_app,f,t,i,j,x,y

' Launch the Flight application and log in only if its main window is not already open

If Not Window("text:=Flight Reservation").Exist(2) Then

qtp = Environment("ProductDir")

flight_app = "\samples\flight\app\flight4a.exe"

SystemUtil.Run qtp & flight_app

Dialog("text:=Login").Activate

Dialog("text:=Login").WinEdit("attached text:=Agent Name:").Set "asdf"

Dialog("text:=Login").WinEdit("attached text:=Password:").SetSecure "4e2d605c46a3b5d32706b9ea1735d00e79319dd2"

Dialog("text:=Login").WinButton("text:=OK").Click

End If

Window("text:=Flight Reservation").Activate

Window("text:=Flight Reservation").ActiveX("acx_name:=MaskEdBox","window id:=0").Type "121212"

' Select every Fly From item in turn and compare it with every Fly To item

f = Window("text:=Flight Reservation").WinComboBox("attached text:=Fly From:").GetItemsCount

For i = 0 To f - 1

Window("text:=Flight Reservation").WinComboBox("attached text:=Fly From:").Select i

x = Window("text:=Flight Reservation").WinComboBox("attached text:=Fly From:").GetROProperty("text")

t = Window("text:=Flight Reservation").WinComboBox("attached text:=Fly To:","x:=244","y:=143").GetItemsCount

For j = 0 To t - 1

Window("text:=Flight Reservation").WinComboBox("attached text:=Fly To:","x:=244","y:=143").Select j

y = Window("text:=Flight Reservation").WinComboBox("attached text:=Fly To:","x:=244","y:=143").GetROProperty("text")

' The step passes when the selected origin and destination differ

If x <> y Then

Reporter.ReportEvent micPass,"Res","Test passed: Fly From '" & x & "' differs from Fly To '" & y & "'"

Else

Reporter.ReportEvent micFail,"Res","Test failed: Fly From and Fly To are both '" & x & "'"

End If

Next

Next

Count Buttons of Flight reservation window

SystemUtil.Run "C:\Program Files\HP\QuickTest Professional\samples\flight\app\flight4a.exe","","C:\Program Files\HP\QuickTest Professional\samples\flight\app\",""

Dialog("Login").WinEdit("Agent Name:").Set "rajan"

Dialog("Login").WinEdit("Agent Name:").Type micTab

Dialog("Login").WinEdit("Password:").SetSecure "4e1ebb0beb016ff0acd0c8d19e774cb573"

Dialog("Login").WinButton("OK").Click

Window("Flight Reservation").ActiveX("MaskEdBox").Type "121212"

Window("Flight Reservation").WinComboBox("Fly From:").Select "Denver"

Window("Flight Reservation").WinComboBox("Fly To:").Select "London"

Window("Flight Reservation").WinButton("FLIGHT").Click

Window("Flight Reservation").Dialog("Flights Table").WinList("From").Select "20262 DEN 10:12 AM LON 05:23 PM AA $112.20"

Window("Flight Reservation").Dialog("Flights Table").WinButton("OK").Click

Window("Flight Reservation").WinEdit("Name:").Set "ranab"

Window("Flight Reservation").WinButton("Insert Order").Click

Call Count_Buttons()

Window("Flight Reservation").Close

Write the function in a separate function library and associate that library with the test as a resource before running the script:

Function Count_Buttons()

Dim oButton,Buttons,ToButtons,i

Set oButton = Description.Create

oButton("Class Name").value = "WinButton"

Set Buttons = Window("text:=Flight Reservation").ChildObjects(oButton)

ToButtons = Buttons.count

msgbox ToButtons

End Function

Verify a checkpoint and, depending on whether it passes or fails, handle further processing with functions

Dim Str

Dialog("Login").WinEdit("Agent Name:").Set "rajan"

Dialog("Login").WinEdit("Agent Name:").Type micTab

Dialog("Login").WinEdit("Password:").SetSecure "4e2558dc476c1aef6477598"

Dialog("Login").WinButton("OK").Click

Window("Flight Reservation").WinComboBox("Fly To:").Check CheckPoint("Fly To:_2")

Str = Window("Flight Reservation").WinComboBox("Fly To:").Check (CheckPoint("Fly To:"))

msgbox (Str)

If Str = true Then

process()

Else

exitaction()

End If

Private Function process()

Window("Flight Reservation").WinComboBox("Fly To:").Select "London"

Window("Flight Reservation").Dialog("Flight Reservations").WinButton("OK").Click

exitaction()

End Function

Private Function exitaction()

Window("Flight Reservation").Close

End Function

Friday, August 19, 2011

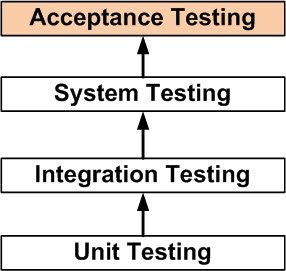

Acceptance Testing

DEFINITION

Acceptance Testing is a level of the software testing process where a system is tested for acceptability.

The purpose of this test is to evaluate the system’s compliance with the business requirements and assess whether it is acceptable for delivery.

ANALOGY

During the process of manufacturing a ballpoint pen, the cap, the body, the tail and clip, the ink cartridge and the ballpoint are produced separately and unit tested separately. When two or more units are ready, they are assembled and Integration Testing is performed. When the complete pen is integrated, System Testing is performed. Once the System Testing is complete, Acceptance Testing is performed so as to confirm that the ballpoint pen is ready to be made available to the end-users.

METHOD

Usually, the Black Box Testing method is used in Acceptance Testing.

Testing does not usually follow a strict procedure and is not scripted but is rather ad-hoc.

TASKS

Acceptance Test Plan: Prepare, Review, Rework, Baseline

Acceptance Test Cases/Checklist: Prepare, Review, Rework, Baseline

Acceptance Test: Perform

When is it performed?

Acceptance Testing is performed after System Testing and before making the system available for actual use.

Who performs it?

Internal Acceptance Testing (Also known as Alpha Testing) is performed by members of the organization that developed the software but who are not directly involved in the project (Development or Testing). Usually, it is the members of Product Management, Sales and/or Customer Support.

External Acceptance Testing is performed by people who are not employees of the organization that developed the software.

Customer Acceptance Testing is performed by the customers of the organization that developed the software. They are the ones who asked the organization to develop the software for them. [This is in the case of the software not being owned by the organization that developed it.]

User Acceptance Testing (Also known as Beta Testing) is performed by the end users of the software. They can be the customers themselves or the customers’ customers.

Definition by ISTQB

acceptance testing: Formal testing with respect to user needs, requirements, and business processes conducted to determine whether or not a system satisfies the acceptance criteria and to enable the user, customers or other authorized entity to determine whether or not to accept the system.

System Testing

DEFINITION

System Testing is a level of the software testing process where a complete, integrated system/software is tested.

The purpose of this test is to evaluate the system’s compliance with the specified requirements.

ANALOGY

During the process of manufacturing a ballpoint pen, the cap, the body, the tail, the ink cartridge and the ballpoint are produced separately and unit tested separately. When two or more units are ready, they are assembled and Integration Testing is performed. When the complete pen is integrated, System Testing is performed.

METHOD

Usually, the Black Box Testing method is used.

TASKS

System Test Plan: Prepare, Review, Rework, Baseline

System Test Cases: Prepare, Review, Rework, Baseline

System Test: Perform

When is it performed?

System Testing is performed after Integration Testing and before Acceptance Testing.

Who performs it?

Normally, independent Testers perform System Testing.

Definition by ISTQB

system testing: The process of testing an integrated system to verify that it meets specified requirements.

Integration Testing

DEFINITION

Integration Testing is a level of the software testing process where individual units are combined and tested as a group.

The purpose of this level of testing is to expose faults in the interaction between integrated units.

Test drivers and test stubs are used to assist in Integration Testing.

Note: The definition of a unit is debatable and it could mean any of the following:

1. the smallest testable part of software

2. a ‘module’ which could consist of many of ‘1’

3. a ‘component’ which could consist of many of ‘2’

ANALOGY

During the process of manufacturing a ballpoint pen, the cap, the body, the tail and clip, the ink cartridge and the ballpoint are produced separately and unit tested separately. When two or more units are ready, they are assembled and Integration Testing is performed. For example, whether the cap fits into the body or not.

METHOD

Any of Black Box Testing, White Box Testing, and Gray Box Testing methods can be used. Normally, the method depends on your definition of ‘unit’.

TASKS

Integration Test Plan: Prepare, Review, Rework, Baseline

Integration Test Cases/Scripts: Prepare, Review, Rework, Baseline

Integration Test: Perform

When is Integration Testing performed?

Integration Testing is performed after Unit Testing and before System Testing.

Who performs Integration Testing?

Either Developers themselves or independent Testers perform Integration Testing.

APPROACHES

Big Bang is an approach to Integration Testing where all or most of the units are combined and tested in one go. This approach is taken when the testing team receives the entire software in a bundle. So what is the difference between Big Bang Integration Testing and System Testing? Well, the former tests only the interactions between the units while the latter tests the entire system.

Top Down is an approach to Integration Testing where top level units are tested first and lower level units are tested step by step after that. This approach is taken when top down development approach is followed. Test Stubs are needed to simulate lower level units which may not be available during the initial phases.

Bottom Up is an approach to Integration Testing where bottom level units are tested first and upper level units are tested step by step after that. This approach is taken when a bottom up development approach is followed. Test Drivers are needed to simulate higher level units which may not be available during the initial phases.

Sandwich/Hybrid is an approach to Integration Testing which is a combination of Top Down and Bottom Up approaches.
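
As a rough illustration of the Test Stub idea from the Top Down approach, the Python sketch below tests a top level unit against a stand-in for a lower level unit that is not available yet. All names here (OrderService, PriceLookupStub, total_price, run_top_down_check) are hypothetical and exist only for this example.

```python
class PriceLookupStub:
    """Test stub: stands in for a lower level pricing unit that is not ready yet."""

    def price_of(self, item):
        # Return a fixed, canned value instead of doing a real lookup.
        return 10


class OrderService:
    """Top level unit under test; it depends on a lower level price lookup."""

    def __init__(self, price_lookup):
        self.price_lookup = price_lookup

    def total_price(self, items):
        # Sum the price of every item using whichever lookup was supplied.
        return sum(self.price_lookup.price_of(item) for item in items)


def run_top_down_check():
    """Exercise the top level unit with the stub plugged in."""
    service = OrderService(PriceLookupStub())
    return service.total_price(["pen", "cap", "clip"])


if __name__ == "__main__":
    print(run_top_down_check())
```

In the Bottom Up approach the roles are reversed: the real lower level unit exists, and a Test Driver (test code playing the part of OrderService) calls it directly.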

TIPS

Ensure that you have a proper Detail Design document where interactions between each unit are clearly defined. In fact, you will not be able to perform Integration Testing without this information.

Ensure that you have a robust Software Configuration Management system in place. Or else, you will have a tough time tracking the right version of each unit, especially if the number of units to be integrated is huge.

Make sure that each unit is first unit tested before you start Integration Testing.

As far as possible, automate your tests, especially when you use the Top Down or Bottom Up approach, since regression testing is important each time you integrate a unit, and manual regression testing can be inefficient.

Definition by ISTQB

integration testing: Testing performed to expose defects in the interfaces and in the interactions between integrated components or systems. See also component integration testing, system integration testing.

component integration testing: Testing performed to expose defects in the interfaces and interaction between integrated components.

system integration testing: Testing the integration of systems and packages; testing interfaces to external organizations (e.g. Electronic Data Interchange, Internet).

Unit Testing

DEFINITION

Unit Testing is a level of the software testing process where individual units/components of a software/system are tested. The purpose is to validate that each unit of the software performs as designed.

A unit is the smallest testable part of software. It usually has one or a few inputs and usually a single output. In procedural programming a unit may be an individual program, function, procedure, etc. In object-oriented programming, the smallest unit is a method, which may belong to a base/super class, abstract class or derived/child class. (Some treat a module of an application as a unit. This is to be discouraged as there will probably be many individual units within that module.)

Unit testing frameworks, drivers, stubs and mock or fake objects are used to assist in unit testing.
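
To make the idea of a unit with a few inputs and a single output concrete, here is a minimal sketch using Python's standard unittest framework. The function apply_discount and its rules are made up for illustration; they are not taken from any real project.

```python
import unittest


def apply_discount(price, percent):
    """Unit under test: two inputs, a single output."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return price * (100 - percent) / 100


class ApplyDiscountTest(unittest.TestCase):
    def test_typical_discount(self):
        self.assertEqual(apply_discount(200, 25), 150)

    def test_zero_discount_leaves_price_unchanged(self):
        self.assertEqual(apply_discount(80, 0), 80)

    def test_invalid_percent_is_rejected(self):
        # The unit should refuse a percentage outside the 0..100 range.
        with self.assertRaises(ValueError):
            apply_discount(80, 120)


if __name__ == "__main__":
    unittest.main()
```

Each test checks one behavior of the unit in isolation, so a failure points directly at the function rather than at the rest of the system.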

METHOD

Unit Testing is performed by using the White Box Testing method.

When is it performed?

Unit Testing is the first level of testing and is performed prior to Integration Testing.

Who performs it?

Unit Testing is normally performed by software developers themselves or their peers. In rare cases it may also be performed by independent software testers.

TASKS

Unit Test Plan: Prepare, Review, Rework, Baseline

Unit Test Cases/Scripts: Prepare, Review, Rework, Baseline

Unit Test: Perform

BENEFITS

Unit testing increases confidence in changing/maintaining code. If good unit tests are written and if they are run every time any code is changed, the likelihood of any defects due to the change being promptly caught is very high. If unit testing is not in place, the most one can do is hope for the best and wait till the test results at higher levels of testing are out. Also, if code is already made less interdependent to make unit testing possible, the unintended impact of changes to any code is smaller.

Code is more reusable. In order to make unit testing possible, code needs to be modular. This means that code is easier to reuse.

Development is faster. How? If you do not have unit testing in place, you write your code and perform that fuzzy ‘developer test’ (you set some breakpoints, fire up the GUI, provide a few inputs that hopefully hit your code, and hope that you are all set). If you have unit testing in place, you write the test, write the code and run the tests. Writing tests takes time, but that time is offset by how quickly the tests run: you need not fire up the GUI and provide all those inputs. And, of course, unit tests are more reliable than ‘developer tests’. Development is faster in the long run too. How? The effort required to find and fix defects found during unit testing is peanuts in comparison to the effort for those found during system testing or acceptance testing.

The cost of fixing a defect detected during unit testing is lower than that of defects detected at higher levels. Compare the cost (time, effort, destruction, humiliation) of a defect detected during acceptance testing or, say, when the software is live.

Debugging is easy. When a test fails, only the latest changes need to be debugged. With testing at higher levels, changes made over the span of several days/weeks/months need to be debugged.

Code is more reliable. Why? I think there is no need to explain this to a sane person.

TIPS

Find a tool/framework for your language.

Do not create test cases for ‘everything’: some will be handled by ‘themselves’. Instead, focus on the tests that impact the behavior of the system.

Isolate the development environment from the test environment.

Use test data that is close to that of production.

Before fixing a defect, write a test that exposes the defect. Why? First, you will later be able to catch the defect if you do not fix it properly. Second, your test suite is now more comprehensive. Third, you will most probably be too lazy to write the test after you have already ‘fixed’ the defect.

Write test cases that are independent of each other. For example, if a class depends on a database, do not write a case that interacts with the database to test the class. Instead, create an abstract interface around that database connection and implement that interface with a mock object.

Aim at covering all paths through the unit. Pay particular attention to loop conditions.

Make sure you are using a version control system to keep track of your code as well as your test cases.

In addition to writing cases to verify the behavior, write cases to ensure performance of the code.

Perform unit tests continuously and frequently.
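
The tip about wrapping a database connection in an abstract interface could look roughly like the Python sketch below. UserStore, InMemoryUserStore and Greeter are hypothetical names invented for this example; a real project would have its own interface and its own database-backed implementation.

```python
from abc import ABC, abstractmethod


class UserStore(ABC):
    """Abstract interface around the database connection."""

    @abstractmethod
    def find_name(self, user_id):
        """Return the user's name, or None if the user is unknown."""


class InMemoryUserStore(UserStore):
    """Mock object: same interface as a database-backed store, but no database."""

    def __init__(self, users):
        self._users = dict(users)

    def find_name(self, user_id):
        return self._users.get(user_id)


class Greeter:
    """Class under test; it only knows the interface, not the database."""

    def __init__(self, store):
        self.store = store

    def greeting_for(self, user_id):
        name = self.store.find_name(user_id)
        if name is None:
            return "Hello, guest"
        return "Hello, " + name


if __name__ == "__main__":
    greeter = Greeter(InMemoryUserStore({1: "rajan"}))
    print(greeter.greeting_for(1))
    print(greeter.greeting_for(2))
```

Because Greeter receives the store from outside, each test case can build its own small in-memory store and stays independent of the database and of every other test case.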

ONE MORE REASON

Let's say you have a program comprising two units. The only test you perform is system testing. [You skip unit and integration testing.] During testing, you find a bug. Now, how will you determine the cause of the problem?

Is the bug due to an error in unit 1?

Is the bug due to an error in unit 2?

Is the bug due to errors in both units?

Is the bug due to an error in the interface between the units?

Is the bug due to an error in the test or test case?

Unit testing is often neglected but it is, in fact, the most important level of testing.

Unit Testing is a level of the software testing process where individual units/components of a software/system are tested. The purpose is to validate that each unit of the software performs as designed.

A unit is the smallest testable part of software. It usually has one or a few inputs and usually a single output. In procedural programming a unit may be an individual program, function, procedure, etc. In object-oriented programming, the smallest unit is a method, which may belong to a base/super class, abstract class or derived/child class. (Some treat a module of an application as a unit. This is to be discouraged as there will probably be many individual units within that module.)

Unit testing frameworks, drivers, stubs and mock or fake objects are used to assist in unit testing.

METHOD

Unit Testing is performed by using the White Box Testing method.

When is it performed?

Unit Testing is the first level of testing and is performed prior to Integration Testing.

Who performs it?

Unit Testing is normally performed by software developers themselves or their peers. In rare cases it may also be performed by independent software testers.

TASKS

Unit Test Plan

Prepare

Review

Rework

Baseline

Unit Test Cases/Scripts

Prepare

Review

Rework

Baseline

Unit Test

Perform

BENEFITS

Unit testing increases confidence in changing/maintaining code. If good unit tests are written and if they are run every time any code is changed, the likelihood of any defects due to the change being promptly caught is very high. If unit testing is not in place, the most one can do is hope for the best and wait till the test results at higher levels of testing are out. Also, if codes are already made less interdependent to make unit testing possible, the unintended impact of changes to any code is less.

Codes are more reusable. In order to make unit testing possible, codes need to be modular. This means that codes are easier to reuse.

Development is faster. How? If you do not have unit testing in place, you write your code and perform that fuzzy ‘developer test’ (You set some breakpoints, fire up the GUI, provide a few inputs that hopefully hit your code and hope that you are all set.) In case you have unit testing in place, you write the test, code and run the tests. Writing tests takes time but the time is compensated by the time it takes to run the tests. The test runs take very less time: You need not fire up the GUI and provide all those inputs. And, of course, unit tests are more reliable than ‘developer tests’. Development is faster in the long run too. How? The effort required to find and fix defects found during unit testing is peanuts in comparison to those found during system testing or acceptance testing.

The cost of fixing a defect detected during unit testing is lesser in comparison to that of defects detected at higher levels. Compare the cost (time, effort, destruction, humiliation) of a defect detected during acceptance testing or say when the software is live.

Debugging is easy. When a test fails, only the latest changes need to be debugged. With testing at higher levels, changes made over the span of several days/weeks/months need to be debugged.

Codes are more reliable. Why? I think there is no need to explain this to a sane person.

TIPS

Find a tool/framework for your language.

Do not create test cases for ‘everything’: some will be handled by ‘themselves’. Instead, focus on the tests that impact the behavior of the system.

Isolate the development environment from the test environment.

Use test data that is close to that of production.

Before fixing a defect, write a test that exposes the defect. Why? First, you will later be able to catch the defect if you do not fix it properly. Second, your test suite is now more comprehensive. Third, you will most probably be too lazy to write the test after you have already ‘fixed’ the defect.

Write test cases that are independent of each other. For example if a class depends on a database, do not write a case that interacts with the database to test the class. Instead, create an abstract interface around that database connection and implement that interface with mock object.

Aim at covering all paths through the unit. Pay particular attention to loop conditions.

Make sure you are using a version control system to keep track of your code as well as your test cases.

In addition to writing cases to verify the behavior, write cases to ensure performance of the code.

Perform unit tests continuously and frequently.

ONE MORE REASON

Let's say you have a program comprising two units. The only test you perform is system testing. [You skip unit and integration testing.] During testing, you find a bug. Now, how will you determine the cause of the problem?

Is the bug due to an error in unit 1?

Is the bug due to an error in unit 2?

Is the bug due to errors in both units?

Is the bug due to an error in the interface between the units?

Is the bug due to an error in the test or test case?

Unit testing is often neglected but it is, in fact, the most important level of testing.

When do we have to stop testing?

Common factors in deciding when to stop are:

Deadlines (release deadlines, testing deadlines, etc.)

Test cases completed with a certain percentage passed.

Test budget depleted.

Coverage of code/functionality/requirements reaches a specified point.

Bug rate falls below a certain level.

Beta or alpha testing period ends.
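Several of these factors are measurable, so a team can write its exit criteria down as a simple check. The sketch below is only an illustration: the field names and the threshold values (95% pass rate, 80% coverage, 2 new bugs per week) are assumptions, not recommended figures.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class ProjectStatus:
    pass_rate: float          # fraction of executed test cases that passed, 0.0 to 1.0
    coverage: float           # fraction of code/functionality/requirements covered
    new_bugs_per_week: float  # current bug discovery rate
    budget_left: float        # remaining test budget, in any unit
    days_to_deadline: int     # days until the release or testing deadline


def reasons_to_stop(status: ProjectStatus) -> List[str]:
    """Return every stop criterion that is currently met (assumed thresholds)."""
    reasons = []
    if status.days_to_deadline <= 0:
        reasons.append("deadline reached")
    if status.pass_rate >= 0.95:
        reasons.append("pass rate target met")
    if status.budget_left <= 0:
        reasons.append("test budget depleted")
    if status.coverage >= 0.80:
        reasons.append("coverage target met")
    if status.new_bugs_per_week < 2:
        reasons.append("bug rate below threshold")
    return reasons


status = ProjectStatus(pass_rate=0.97, coverage=0.75, new_bugs_per_week=1.0,
                       budget_left=10.0, days_to_deadline=5)
print(reasons_to_stop(status))
```

An empty list means none of the agreed criteria has been reached yet, so testing continues.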

Why we have to start testing early

Introduction:

You have probably heard and read in blogs that “testing should start early in the life cycle of development”. In this chapter, we will discuss in practical terms why to start testing early.

Fact One

Let’s start with the regular software development life cycle:

First we’ve got a planning phase: needs are expressed, people are contacted, meetings are booked. Then the decision is made: we are going to do this project.

After that, analysis is done, followed by the code build.

Now it’s your turn: you can start testing.

Do you think this is what is going to happen? Dream on.

This is what's going to happen:

Planning, analysis and code build will take more time than planned.

That would not be a problem if the total project time were extended accordingly. Forget it: most likely you will have to perform the tests in a few days.

The deadline is not going to be moved at all: promises have been made to customers, and project managers are going to lose their bonuses if they deliver past the deadline.

Fact Two

The earlier you find a bug, the cheaper it is to fix it.

If you are able to find the bug during requirements definition, it is going to be 50 times cheaper (!!) than when you find the same bug in testing.

It will even be 100 times cheaper (!!) than when you find the bug after going live.

Easy to understand: if you find the bug in the requirements definition, all you have to do is change the text of the requirements. If you find the same bug in final testing, analysis and code build have already taken place. Much more effort has been spent building something that nobody wanted.

Conclusion: start testing early!

This is what you should do:

Golden software testing rules

Introduction

Read these simple golden rules for software testing. They are based on years of practical testing experience and solid theory.

It's all about finding the bug as early as possible:

Start the software testing process as soon as you get the requirement specification document. Review the specification document carefully and get your queries resolved. This way you can easily find bugs in the requirement document (otherwise the development team might develop the software with the wrong functionality), and it often happens that the requirement document gets changed when the test team raises queries.

After the requirement document review, prepare scenarios and test cases.

Make sure you have at least these 3 software testing levels

1. Integration testing or Unit testing (performed by dev team or separate white box testing team)

2. System testing (performed by professional testers)

3. Acceptance testing (performed by end users, sometimes Business Analyst and test leads assist end users)

Don’t expect too much of automated testing

Automated testing can be extremely useful and can be a real time saver. But it can also turn out to be a very expensive and ineffective solution. Consider the ROI.

Deal with resistance

Don't try to become popular by completing tasks ahead of time at the cost of quality. Some testers do this and get appreciation from managers in early project cycles. But you should stick to quality and perform quality testing. If you have really tested the application fully, then you can back it up with numbers (count of bugs reported, test cases prepared, etc.). Your project partners will definitely appreciate the great job you're doing!

Do regression testing for every new release:

Once the development team is done with the bug fixes and gives a release to the testing team, the testing team should perform regression testing in addition to verifying the bug fixes. In early test cycles, regression testing of the entire application is required. In late testing cycles, when the application is near UAT, discuss the impact of the bug fixes with the dev team and test the functionality accordingly.

Test with real data:

Apart from invalid data entry, testers must test the application with real data. Help with this can be obtained from the business analyst and the client.

You can get help from sites like http://www.fakenamegenerator.com/. But when it comes to the finance domain, ask the client for sample data, because there can be data like $10.87 Million.

Keep track of change requests

Sometimes, in later test cycles, everyone in the project becomes so busy that there is no time to document the change requests. In this situation, I suggest that testers (test leads) keep track of change requests (which arrive through email communication) in a separate Excel document.

Also give the change requests a priority status:

Show stopper (must have, no work around)

Major (must have, work around possible)

Minor (not business critical, but wanted)

Nice to have

Actively use the statuses above for reporting and follow-up!

Note - In CMMI or process-oriented companies, change request management (configuration management) systems are already in place.

Don't be the quality police:

Let the business analyst and technical managers decide which bugs need to be fixed. Testers can certainly give them input on why a fix is required.

'Impact' and 'Chance' are the keys to decide on risk and priority

You should keep a helicopter view of your project. For each part of your application you have to define the 'impact' and the 'chance' of anything going wrong.

'Impact' being what happens if a certain situation occurs.

What’s the impact of an airplane crashing?

'Chance' is the likelihood that something happens.

What’s the chance of an airplane crashing?
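One common way to combine the two is to score each on a small scale and multiply them. The text above does not prescribe a formula, so treat the multiplication, the 1 to 5 scales, and the example application areas below as assumptions.

```python
from typing import Dict, List, Tuple


def rank_by_risk(areas: Dict[str, Tuple[int, int]]) -> List[Tuple[str, int]]:
    """Rank application areas by risk = impact * chance, highest first.

    Each value is (impact, chance), both scored from 1 (low) to 5 (high).
    """
    scored = [(name, impact * chance) for name, (impact, chance) in areas.items()]
    return sorted(scored, key=lambda item: item[1], reverse=True)


# Hypothetical areas of an application with assumed scores
areas = {
    "payment": (5, 3),     # severe if it fails, moderately likely
    "search": (2, 4),      # annoying if it fails, fairly likely
    "help pages": (1, 1),  # harmless and unlikely
}
for name, risk in rank_by_risk(areas):
    print(name, risk)
```

The areas at the top of the ranking are the ones to test first and most thoroughly.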

Delivery to client:

Once the final testing cycle is completed or when the application is going for UAT, the test lead should report how many bugs still persist (with their priority) and let the technical manager (dev team), product manager and business analyst decide whether the application should be delivered or not. Testers can certainly give them input on the open bugs.

Focus on the software testing process, not on the tools

Test management and other testing tools make our tasks easier, but these tools cannot perform testing. So instead of focusing on tools, focus on core software testing. You can be very successful using basic tools like MS Excel.

Golden rules for bug reporting

Introduction

Read these simple golden rules for bug reporting. They are based on years of practical testing experience and solid theory.

Make one change request for every bug

- This will enable you to keep count of the number of bugs in the application

- You'll be able to give a priority to every bug separately

- You'll be able to test each resolved bug separately (and avoid requests that are only half resolved)

Give a step-by-step description of the problem:

E.g. "- I entered the Client page

- I performed a search on 'Google'

- In the Result page 2 clients were displayed with ‘Google’ in their name

- I clicked on the second one

---> The application returned a server error"

Explain the problem in plain language:

- Developers / re-testers don't necessarily have business knowledge

- Don't use business terminology

Be concrete

- Errors usually don't appear for every case you test

- What is the difference between this case (that failed) and other cases (that didn't fail)?

Give a clear explanation of the circumstances in which the bug appeared

- Give concrete information (field names, numbers, names,...)

If a result is not as expected, indicate what is expected exactly

- Not OK: "The message given in this screen is not correct"

- OK: "The message that appears in the Client screen when entering a wrong Client number is 'enter client number'"

--> This should be: "Enter a valid client number please"

Explain why (in your opinion) the request is a "show stopper"

- Don't expect other contributors to the project to always know what is important

- If you know a certain bug is critical, explain why!

Last but not least: don't forget to use screen shots!

- One picture says more than 1000 words

- Use advanced tools like SnagIt (www.techsmith.com/screen-capture.asp)

When testers follow these rules, it will be a real time and money saver for your project! Don't expect the testers to know this by themselves. Explain these rules to them and give feedback when they do bad bug reporting!

QTP Scripts

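Script to find a broken link:

'Start of Code

Dim a, URL, httprot

' Supply your own Browser, Page and Link descriptions inside the parentheses

Set a = Browser().Page().Link()

URL = a.GetROProperty("href")

Set httprot = CreateObject("MSXML2.XmlHttp")

' Synchronous GET request (third argument False)

httprot.open "GET", URL, False

On Error Resume Next

httprot.send

If Err.Number <> 0 Then

' The request itself could not be sent

MsgBox "fail"

ElseIf httprot.Status <> 200 Then

Print httprot.Status

MsgBox "fail"

Else

Print httprot.Status

MsgBox "pass"

End If

On Error GoTo 0

Set httprot = Nothing

'End Of Code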

---------------------------------------------------------

Get names of all open Browsers:

Set bDesc = Description.Create()

bDesc("application version").Value = "internet explorer 6"

Set bColl = Desktop.ChildObjects(bDesc)

Cnt = bColl.Count

MsgBox "There are total: " & Cnt & " browsers opened"

For i = 0 To (Cnt - 1)

MsgBox "Browser: " & i & " has title: " & bColl(i).GetROProperty("title")

Next ' i

Set bColl = Nothing

Set bDesc = Nothing

------------------------------------------------------------

QTP script to capture the text of a tooltip:

' Place mouse cursor over the link

Browser("Yahoo!").Page("Yahoo!").WebElement("text:=My Yahoo!").FireEvent "onmouseover"

wait 1

' Grab tooltip

ToolTip = Window("nativeclass:=tooltips_class32").GetROProperty("text")

Capturing tooltips of images:

Now I'm going to show how to capture tool tips of images located on a Web page. Actually, the solution is simple.

To capture the tool tip of an image, we can get the value of its "alt" run-time object property with the GetROProperty("alt") function (here "brw", "pg" and "img" stand for your own object names):

Browser("brw").Page("pg").Image("img").GetROProperty("alt")

Let's verify this code in practice. For example, let's check tooltips from Wikipedia Main page

SDLC (Software Development Life Cycle)

SOFTWARE DEVELOPMENT LIFE CYCLE [SDLC] Information:

Software Development Life Cycle, or Software Development Process, defines the steps/stages/phases in the building of software.

There are various kinds of software development models like:

Waterfall model

Spiral model

Iterative and incremental development (like ‘Unified Process’ and ‘Rational Unified Process’)

Agile development (like ‘Extreme Programming’ and ‘Scrum’)

Models are evolving with time and the development life cycle can vary significantly from one model to the other. It is beyond the scope of this particular article to discuss each model. However, each model comprises all or some of the following phases/activities/tasks.

SDLC IN SUMMARY

Project Planning

Requirements Development

Estimation

Scheduling

Design

Coding

Test Build/Deployment

Unit Testing

Integration Testing

User Documentation

System Testing

Acceptance Testing

Production Build/Deployment

Release

Maintenance

SDLC IN DETAIL

Project Planning

Prepare

Review

Rework

Baseline

Revise [if necessary] >> Review >> Rework >> Baseline

Requirements Development [Business Requirements and Software/Product Requirements]

Develop

Review

Rework

Baseline

Revise [if necessary] >> Review >> Rework >> Baseline

Estimation [Size / Effort / Cost]

Prepare

Review

Rework

Baseline

Revise [if necessary] >> Review >> Rework >> Baseline

Scheduling

Prepare

Review

Rework

Baseline

Revise [if necessary] >> Review >> Rework >> Baseline

Design [High Level Design and Detailed Design]

Coding

Code

Review

Rework

Commit

Recode [if necessary] >> Review >> Rework >> Commit

Test Builds Preparation/Deployment

Build/Deployment Plan

Prepare

Review

Rework

Baseline

Revise [if necessary] >> Review >> Rework >> Baseline

Build/Deploy

Unit Testing

Test Plan

Prepare

Review

Rework

Baseline

Revise [if necessary] >> Review >> Rework >> Baseline

Test Cases/Scripts

Prepare

Review

Rework

Baseline

Execute

Revise [if necessary] >> Review >> Rework >> Baseline >> Execute

Integration Testing

Test Plan

Prepare

Review

Rework

Baseline

Revise [if necessary] >> Review >> Rework >> Baseline

Test Cases/Scripts

Prepare

Review

Rework

Baseline

Execute

Revise [if necessary] >> Review >> Rework >> Baseline >> Execute

User Documentation

Prepare

Review

Rework

Baseline

Revise [if necessary] >> Review >> Rework >> Baseline

System Testing

Test Plan

Prepare

Review

Rework

Baseline

Revise [if necessary] >> Review >> Rework >> Baseline

Test Cases/Scripts

Prepare

Review

Rework

Baseline

Execute

Revise [if necessary] >> Review >> Rework >> Baseline >> Execute

Acceptance Testing [Internal Acceptance Test and External Acceptance Test]

Test Plan

Prepare

Review

Rework

Baseline

Revise [if necessary] >> Review >> Rework >> Baseline

Test Cases/Scripts

Prepare

Review

Rework

Baseline

Execute

Revise [if necessary] >> Review >> Rework >> Baseline >> Execute

Production Build/Deployment

Build/Deployment Plan

Prepare

Review

Rework

Baseline

Revise [if necessary] >> Review >> Rework >> Baseline

Build/Deploy

Release

Prepare

Review

Rework

Release

Maintenance

Recode [Enhance software / Fix bugs]

Retest

Redeploy

Rerelease

Notes:

The life cycle mentioned here is NOT set in stone and each phase does not necessarily have to be implemented in the order mentioned.

Though SDLC uses the term ‘Development’, it does not focus just on the coding tasks done by developers but incorporates the tasks of all stakeholders, including testers.

There may still be many other activities/tasks that have not been specifically mentioned above, like Configuration Management. No matter what, it is essential that you clearly understand the software development life cycle your project is following. One issue that is widespread in many projects is that software testers are involved much later in the life cycle, due to which they lack visibility and authority (which ultimately compromises software quality).

Defect

DEFINITION

A Software Bug / Defect is a condition in a software product which does not meet a software requirement (as stated in the requirement specifications) or end-user expectations (which may not be specified but are reasonable). In other words, a bug is an error in coding or logic that causes a program to malfunction or to produce incorrect/unexpected results.

A program that contains a large number of bugs is said to be buggy.

Reports detailing bugs in software are known as bug reports.

Applications for tracking bugs are known as bug tracking tools.

The process of finding the cause of bugs is known as debugging.

The process of intentionally injecting bugs in a software program, to estimate test coverage by monitoring the detection of those bugs, is known as bebugging.

Software Testing proves that bugs exist but NOT that bugs do not exist.
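The bebugging idea can be made concrete with a little arithmetic. In this sketch the detection ratio is the share of injected bugs that the tests caught, and the estimate of real bugs assumes that real bugs are found at the same rate as injected ones, which is a simplifying assumption. The function names are made up for illustration.

```python
def detection_ratio(injected: int, injected_found: int) -> float:
    """Share of intentionally injected bugs that testing detected."""
    if injected <= 0:
        raise ValueError("at least one bug must be injected")
    if not 0 <= injected_found <= injected:
        raise ValueError("injected_found must be between 0 and injected")
    return injected_found / injected


def estimated_real_bugs(injected: int, injected_found: int, real_found: int) -> float:
    """Estimate the total number of real bugs, assuming the same detection rate."""
    ratio = detection_ratio(injected, injected_found)
    if ratio == 0:
        raise ValueError("no injected bugs were found; cannot estimate")
    return real_found / ratio


# 20 bugs injected, 15 of them detected, 30 real bugs detected so far
print(detection_ratio(20, 15))          # 0.75
print(estimated_real_bugs(20, 15, 30))  # 40.0
```

With these example numbers, the tests caught 75% of the injected bugs, which suggests that roughly 10 real bugs remain undetected.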

CLASSIFICATION

Software Bugs / Defects are normally classified as per:

Severity / Impact

Probability / Visibility

Priority / Urgency

Related Module / Component

Related Dimension of Quality

Phase Detected

Phase Injected

Severity/Impact

Severity indicates the impact of a bug on the quality of the software. This is normally set by the Software Tester himself/herself.

Critical:

There is no workaround.

Affects critical functionality or critical data.

Example: Unsuccessful installation, complete failure of a feature.

Major:

There is a workaround, but it is not obvious and is difficult.

Affects major functionality or major data.

Example: A feature is not functional from one module, but the task is doable if 10 complicated indirect steps are followed in one or more other modules.

Minor:

There is an easy workaround.

Affects minor functionality or non-critical data.

Example: A feature that is not functional in one module but the task is easily doable from another module.

Trivial:

There is no need for a workaround.

Does not affect functionality or data.

Does not impact productivity or efficiency.

Example: Layout discrepancies, spelling/grammatical errors.

Severity is also denoted as S1 for Critical, S2 for Major and so on.

The examples above are only guidelines and different organizations/projects may define severity differently for the same types of bugs.

Probability / Visibility

Probability / Visibility indicates the likelihood of a user encountering the bug.

High: Encountered by all or almost all the users of the feature

Medium: Encountered by about 50% of the users of the feature

Low: Encountered by no or very few users of the feature

The measure of Probability/Visibility is with respect to the usage of a feature and not the overall software. Hence, a bug in a rarely used feature can have a high probability if the bug is easily encountered by users of the feature. Similarly, a bug in a widely used feature can have a low probability if the users rarely detect it.

Priority / Urgency

Priority indicates the importance or urgency of fixing the bug. Though this may be initially set by the Software Tester himself/herself, the priority is finalized by the Project Manager.

Urgent: Must be fixed prior to next build

High: Must be fixed prior to next release

Medium: May be fixed after the release/ in the next release

Low: May or may not be fixed at all

Priority is also denoted as P1 for Urgent and so on.

Normally the following are considered when determining the priority of bugs:

Severity/Impact

Probability/Visibility

Available Resources (Developers to fix and Testers to verify the fixes)

Available Time (Time for fixing, verifying the fixes and performing regression tests after the verification of the fixes)

If a release is already scheduled and if bugs with critical/major severity and high probability are still not fixed, the release is usually postponed.

If a release is already scheduled and bugs with minor/trivial severity and medium/low probability are not fixed, the release is usually made with those bugs listed as Known Issues/Bugs. They are normally addressed in the next release cycle. Nevertheless, any project’s goal should be to make releases with all detected defects fixed.
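The two release rules above can be sketched as a simple check over the bugs that are still open. The names OpenBug and release_decision are hypothetical, and the third outcome, "needs review", is an assumption added for combinations the rules do not mention (for example, a Critical bug with Low probability).

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class OpenBug:
    title: str
    severity: str      # "Critical", "Major", "Minor" or "Trivial"
    probability: str   # "High", "Medium" or "Low"


def release_decision(open_bugs: List[OpenBug]) -> Tuple[str, List[str]]:
    """Return a decision and the titles to list as Known Issues."""
    # Rule 1: critical/major severity with high probability postpones the release.
    if any(b.severity in ("Critical", "Major") and b.probability == "High"
           for b in open_bugs):
        return "postpone", []
    # Rule 2: only Minor or Trivial bugs with medium/low probability remain.
    if all(b.severity in ("Minor", "Trivial") and b.probability in ("Medium", "Low")
           for b in open_bugs):
        return "release", [b.title for b in open_bugs]
    # Assumption: anything else is left to the project manager.
    return "needs review", []


bugs = [OpenBug("Misaligned label", "Trivial", "Low"),
        OpenBug("Export needs a retry", "Minor", "Medium")]
print(release_decision(bugs))
```

Available resources and time, which the text also lists as inputs to priority, are deliberately left out of this sketch.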

Related Module / Component

Related Module / Component indicates the module or component of the software where the bug was detected. This provides information on which module / component is buggy or risky.

Module/Component A

Module/Component B

Module/Component C

…

Related Dimension of Quality

Related Dimension of Quality indicates the aspect of software quality that the bug is connected with.

Functionality

Usability

Performance

Security

Compatibility

…

Phase Detected

Phase Detected indicates the phase in the software development lifecycle where the bug was identified.

Unit Testing

Integration Testing

System Testing

Acceptance Testing

Phase Injected

Phase Injected indicates the phase in the software development lifecycle where the bug was introduced. Phase Injected is always earlier in the software development lifecycle than the Phase Detected. Phase Injected can be known only after a proper root-cause analysis of the bug.

Requirements Development

High Level Design

Detailed Design

Coding

Build/Deployment

Note that the categorizations above are just guidelines and it is up to the project/organization to decide on what kind of categorization to use. In most cases the categorization depends on the bug tracking tool that is being used. It is essential that project members agree beforehand on the categorization (and the meaning of each categorization) to be used so as to avoid arguments, conflicts, and unhealthy bickering later.
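One way a team might record the categorization it has agreed on is as a table of allowed values that every bug report is checked against. The sketch below uses the values listed in this article; the names AGREED_VALUES and invalid_fields are hypothetical, and a bug tracking tool would normally enforce this itself.

```python
# Allowed values per classification, taken from the lists in this article.
AGREED_VALUES = {
    "severity": {"Critical", "Major", "Minor", "Trivial"},
    "probability": {"High", "Medium", "Low"},
    "priority": {"Urgent", "High", "Medium", "Low"},
    "phase_detected": {"Unit Testing", "Integration Testing",
                       "System Testing", "Acceptance Testing"},
    "phase_injected": {"Requirements Development", "High Level Design",
                       "Detailed Design", "Coding", "Build/Deployment"},
}


def invalid_fields(bug: dict) -> list:
    """Return the names of fields whose value is missing or not agreed on."""
    return [field for field, allowed in AGREED_VALUES.items()
            if bug.get(field) not in allowed]


report = {
    "severity": "Blocker",  # not one of the agreed severity values
    "probability": "High",
    "priority": "Urgent",
    "phase_detected": "System Testing",
    "phase_injected": "Coding",
}
print(invalid_fields(report))
```

Here the report is flagged because "Blocker" is not one of the agreed severity values. Module and quality dimension are omitted because their lists are project specific.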

A BUG JOKE

“There is a bug in this ant’s farm.”

“What do you mean? I don’t see any ants in it.”

“Well, that’s the bug.”

A BUG STORY

Once upon a time, in a jungle, there was a little bug. He was young but very smart. He quickly learned the tactics of other bugs in the jungle: how to bring maximum destruction to the plants; how to effectively pester the animals; and most importantly, how to maneuver underground so as to avoid detection. Soon, the little bug was famous / notorious for his ‘severity’. All the bugs in the jungle hailed him as the Lord of the Jungle. Others feared him as the Scourge of the Jungle, and mothers began invoking his name to deter their children from going out at night.

The Jungle Council, headed by the Lion, announced a hefty prize for anyone able to capture the bug, but no one had yet succeeded in capturing, or even sighting, the bug. The bug was a living legend.

For years, the bug basked in glory and he swelled with pride day by day. One day, when the Lion was away hunting, he burrowed to the top of the Lion’s hill and, standing atop the hill, he roared “I have captured the lily-livered Lion’s domain. I am now the true King of the Jungle! I am the Greatest! I am Invincible!”

His words resonated through the jungle and life stood still for a moment in sheer awe of the bug’s capabilities. Just then, it so happened that a Tester was passing by the Jungle and he promptly submitted a bug report with the exact longitude and latitude of the bug’s location. Then, a Developer hurriedly ‘fixed’ the bug (the bug was so swollen up after his boastful speech that he could not squeeze himself back into the burrow in time), and that was the tragic end of the legendary bug.

NOTE: We prefer the term ‘Defect’ over the term ‘Bug’ because ‘Defect’ is more comprehensive.

A Software Bug / Defect is a condition in a software product which does not meet a software requirement (as stated in the requirement specifications) or end-user expectations (which may not be specified but are reasonable). In other words, a bug is an error in coding or logic that causes a program to malfunction or to produce incorrect/unexpected results.

A program that contains a large number of bugs is said to be buggy.

Reports detailing bugs in software are known as bug reports.

Applications for tracking bugs are known as bug tracking tools.

The process of finding the cause of bugs is known as debugging.

The process of intentionally injecting bugs in a software program, to estimate test coverage by monitoring the detection of those bugs, is known as bebugging.
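The arithmetic behind bebugging can be sketched as below. The function names are hypothetical, and the estimate rests on a strong assumption stated in the code: that real bugs are found at the same rate as the seeded ones.

```python
def seeded_detection_rate(seeded_total: int, seeded_found: int) -> float:
    """Share of intentionally injected bugs that the tests detected."""
    if seeded_total <= 0:
        raise ValueError("seeded_total must be positive")
    if not 0 <= seeded_found <= seeded_total:
        raise ValueError("seeded_found must be between 0 and seeded_total")
    return seeded_found / seeded_total


def estimate_total_real_bugs(real_found: int, seeded_total: int,
                             seeded_found: int) -> float:
    """Estimate how many real bugs exist in total.

    Assumption: real bugs are detected at the same rate as seeded bugs.
    """
    rate = seeded_detection_rate(seeded_total, seeded_found)
    if rate == 0:
        raise ValueError("no seeded bugs were found; cannot estimate")
    return real_found / rate


# 20 bugs were seeded and 15 of them were found, alongside 30 real bugs.
print(seeded_detection_rate(20, 15))         # 0.75
print(estimate_total_real_bugs(30, 20, 15))  # 40.0
```

In this example the tests caught 75% of the seeded bugs, so the 30 real bugs found suggest roughly 40 real bugs in total, about 10 of which are still undetected.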

Software Testing proves that bugs exist but NOT that bugs do not exist.

CLASSIFICATION

Software Bugs / Defects are normally classified by:

Severity / Impact

Probability / Visibility

Priority / Urgency

Related Module / Component

Related Dimension of Quality

Phase Detected

Phase Injected


A BUG JOKE