Different message types should be displayed differently. I came across a website which pointed out that web applications and Windows-based applications typically handle four main message types: information, success, warning and error. Each message type should be presented in its own color with its own icon. Validation messages form a special, fifth type.

1. Information Messages

The purpose of information messages is to inform the user about something relevant, regardless of content; this could be any information relevant to a user action. They should be presented in blue, because people associate this color with information.


For example, an information message can show help related to what the current user is doing, or some tips.

2. Success Messages

Success messages should be displayed after a user successfully performs an operation. By that I mean a complete operation, with no partial operations and no errors. For example, the message can say: "Your profile has been saved successfully and a confirmation mail has been sent to the email address you provided." This means that each operation in this process (saving the profile and sending the email) has been performed successfully.


Show this message type in its own color and with its own icon: green with a check mark.

3. Warning Messages

Warning messages should be displayed when an operation could not be completed as a whole. For example: "Your profile has been saved successfully, but the confirmation mail could not be sent to the email address you provided." Or: "If you don't finish your profile now, you won't be able to search for jobs." The usual warning color is yellow, with an exclamation-mark icon.

4. Error Messages

Error messages should be displayed when an operation could not be completed at all. Red is very suitable for this, since people associate this color with an alert of any kind.

5. Validation Messages

The author of that article noticed that many developers can't distinguish between validation messages and other message types (such as error or warning messages). I have often seen a validation message displayed as an error message, which confuses users.

Validation is all about user input and should be treated that way. ASP.NET has built-in controls that give full control over user input. The purpose of validation is to make the user fill in all required fields and enter data in the correct format. Therefore it should be clear that the form will not be submitted until these rules are met.

That's why I like to style validation messages in a slightly less intense red than error messages, with a red exclamation icon.
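The color and icon scheme above can be captured in one lookup so that every screen styles messages the same way. This is a minimal sketch, assuming messages are identified by the hypothetical type names "information", "success", "warning", "error" and "validation". The colors follow the article; the icon names for information and error messages are not given in the text and are placeholders.

```vbscript
' Hypothetical helper: returns Array(color, icon) for a message type.
' Colors follow the article; the "info" and "error" icon names are assumptions.
Function GetMessageStyle(msgType)
    Select Case LCase(Trim(msgType))
        Case "information"
            GetMessageStyle = Array("blue", "info")                  ' icon name is an assumption
        Case "success"
            GetMessageStyle = Array("green", "check mark")
        Case "warning"
            GetMessageStyle = Array("yellow", "exclamation")
        Case "error"
            GetMessageStyle = Array("red", "error")                  ' icon name is an assumption
        Case "validation"
            GetMessageStyle = Array("light red", "red exclamation")  ' softer red than errors
        Case Else
            GetMessageStyle = Array("", "")                          ' unknown type: no styling
    End Select
End Function
```

For example, after `style = GetMessageStyle("Warning")`, `style(0)` is "yellow" and `style(1)` is "exclamation". Giving validation its own case makes it harder to render a validation message as an error by accident.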

Monday, December 19, 2011

Tuesday, September 27, 2011

Ten Tips for effective bug tracking

1. Remember that the only person who can close a bug is the person who found it in the first place. Anyone can resolve it, but only the person who saw the bug can really be sure that what they saw is fixed.

2. A good tester will always try to reduce the reproduction steps to the minimum needed to reproduce the bug. This is extremely helpful for the programmer who has to find it.

3. There are many ways to resolve a bug. A developer can resolve a bug as fixed, won't fix, postponed, not repro, duplicate or by design.

4. You will want to keep careful track of versions. Every build of the software that you give to testers should have a build ID number, so that the poor tester doesn't have to retest the bug on a version of the software where it wasn't even supposed to be fixed.

5. Not repro means that nobody could ever reproduce the bug. Programmers often use this resolution when the bug report is missing the repro steps.

6. If you are a programmer and you are having trouble getting testers to use the bug database, just don't accept bug reports by any other method. If your testers are used to sending you email with bug reports, just bounce the emails back to them with a brief message: "Please put this in the bug database. I can't keep track of emails."

7. If you are a tester and you are having trouble getting programmers to use the bug database, just don't tell them about bugs: put them in the database and let the database email them.

8. If you are a programmer and only some of your colleagues use the bug database, just start assigning them bugs in the database. Eventually they will get the hint.

9. If you are a manager and nobody seems to be using the bug database that you installed at great expense, start assigning new features to people using bugs. A bug database is also a great "unimplemented feature" database.

10. Avoid the temptation to add new fields to the bug database. Every month or so, somebody will come up with a great idea for a new field to put in the database. You get all kinds of clever ideas, for example: keeping track of the file where the bug was found; keeping track of what percentage of the time the bug is reproducible; keeping track of how many times the bug occurred; keeping track of which exact versions of which DLLs were installed on the machine where the bug happened. It's very important not to give in to these ideas. If you do, your new bug entry screen will end up with a thousand fields that you need to supply, and nobody will want to input bug reports any more. For the bug database to work, everybody needs to use it, and if entering bugs "formally" is too much work, people will go around the bug database.
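The rules in tips 1, 3 and 4 can be sketched as a small VBScript class. This is a hypothetical illustration, not the schema of any real bug tracker: the class name `Bug`, its properties and the status strings "Open", "Resolved" and "Closed" are assumptions. In keeping with tip 10, it carries only the fields those tips need.

```vbscript
' Hypothetical bug record illustrating tips 1, 3 and 4.
Class Bug
    Public OpenedBy       ' tip 1: the person who found the bug
    Public FoundInBuild   ' tip 4: build ID the bug was found in
    Public Status         ' assumed states: "Open", "Resolved", "Closed"
    Public Resolution     ' one of the resolutions from tip 3

    Private Sub Class_Initialize()
        Status = "Open"
    End Sub

    ' Anyone may resolve a bug, but only with a known resolution (tip 3).
    Public Sub Resolve(resolutionName)
        Select Case LCase(resolutionName)
            Case "fixed", "won't fix", "postponed", "not repro", "duplicate", "by design"
                Resolution = resolutionName
                Status = "Resolved"
            Case Else
                Err.Raise vbObjectError + 1000, "Bug.Resolve", "Unknown resolution: " & resolutionName
        End Select
    End Sub

    ' Only the person who opened the bug may close it, and only once resolved (tip 1).
    ' Returns True if the bug was closed.
    Public Function CloseBy(userName)
        CloseBy = False
        If Status = "Resolved" And LCase(userName) = LCase(OpenedBy) Then
            Status = "Closed"
            CloseBy = True
        End If
    End Function
End Class
```

A developer calls `Resolve "fixed"`; the bug then stays resolved until the original tester retests it and calls `CloseBy` with their own name.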


Wednesday, September 21, 2011

Synchronize on a particular object

' Function to synchronize on a particular object:
' waits up to 10 seconds for the object to exist and returns True if it appears
Public Function fnSynchronization(objName)
    Dim intLoopStart, intLoopWait
    fnSynchronization = False
    intLoopStart = 1
    intLoopWait = 10
    ' Wait for the object to appear
    Do While intLoopStart <= intLoopWait
        ' Exist(1) waits up to one second for the object
        If objName.Exist(1) Then
            fnSynchronization = True
            Exit Do
        Else
            intLoopStart = intLoopStart + 1
        End If
    Loop
End Function


Verify that items exist in a dropdown box

' Checks that every item in the comma-separated list strItemsToSearch
' is present in objDropdown; returns False if any item is missing
Public Function fnVerifyDropDownItems(objDropdown, strItemsToSearch)
    Dim intItemsCount, intCounter, intItems, strItem, blnItemPresent
    Dim arrItemsToSearch
    fnVerifyDropDownItems = True
    If Not objDropdown.Exist Then
        ' The object is not on screen, so read its name from the test object description
        Reporter.ReportEvent micFail, "The dropdown box '" & objDropdown.GetTOProperty("name") & "' should exist", "The dropdown box does not exist"
        fnVerifyDropDownItems = False
        Exit Function
    End If
    ' Get the run-time count of items in the dropdown
    intItemsCount = CInt(objDropdown.GetROProperty("items count"))
    ' Split the list of expected items on commas
    arrItemsToSearch = Split(strItemsToSearch, ",")
    For intItems = 0 To UBound(arrItemsToSearch)
        blnItemPresent = False
        ' Loop through all items in the dropdown
        For intCounter = 1 To intItemsCount
            strItem = objDropdown.GetItem(intCounter)
            ' Case-insensitive comparison of the trimmed values
            If StrComp(Trim(strItem), Trim(arrItemsToSearch(intItems)), vbTextCompare) = 0 Then
                blnItemPresent = True
                Reporter.ReportEvent micPass, "The item '" & arrItemsToSearch(intItems) & "' should be present in the dropdown box", "The specified item exists in the dropdown box"
                Exit For
            End If
        Next
        If Not blnItemPresent Then
            Reporter.ReportEvent micFail, "The item '" & arrItemsToSearch(intItems) & "' should be present in the dropdown box", "The specified item does not exist in the dropdown box"
            fnVerifyDropDownItems = False
        End If
    Next
End Function


Fetch a cell value from a table

Dim x
Browser("S.O.S. Math - Mathematical").Page("S.O.S. Math - Mathematical").Frame("Frame").Check CheckPoint("Frame")
Browser("S.O.S. Math - Mathematical").Page("S.O.S. Math - Mathematical").Frame("Frame").WebElement("Class 1 to Class 12 Lessons,").FireEvent "onmouseover",718,13
Browser("S.O.S. Math - Mathematical").Page("S.O.S. Math - Mathematical").Link("A Trigonometric Table").Click 66,12
Browser("S.O.S. Math - Mathematical").Navigate "http://www.sosmath.com/tables/trigtable/trigtable.html"
Browser("S.O.S. Math - Mathematical").Page("Trig Table").Frame("Frame").Check CheckPoint("Frame_2")
Browser("S.O.S. Math - Mathematical").Page("Trig Table").Frame("Frame").Link("Class 1 to Class 12").FireEvent "onmouseover",42,1
Browser("S.O.S. Math - Mathematical").Page("Trig Table").Frame("Frame").WebElement("Lessons, Animations, Videos").FireEvent "onmouseover",45,8
Browser("S.O.S. Math - Mathematical").Page("Trig Table").WebTable("0").Check CheckPoint("0")
' GetCellData(row, column) returns the text of the cell in row 2, column 4
x = Browser("S.O.S. Math - Mathematical").Page("Trig Table").WebTable("0").GetCellData(2,4)
MsgBox "Value is: " & x
Browser("S.O.S. Math - Mathematical").CloseAllTabs


Web link counter script

Dim Des_obj, link_col
Browser("editorial » Blog Archive_2").Page("editorial » Blog Archive").Check CheckPoint("editorial » Blog Archive_2")
Browser("editorial » Blog Archive_2").Page("editorial » Blog Archive").Link("Debt Consolidation").Click 48,5
Browser("editorial » Blog Archive_2").Navigate "http://editorial.co.in/debt-consolidation/debt-consolidation.php"
Browser("editorial » Blog Archive_2").Page("editorial » Blog Archive_2").Check CheckPoint("editorial » Blog Archive_3")
Browser("editorial » Blog Archive_2").Page("editorial » Blog Archive_2").Link("Debt Consolidation Calculator").Click 121,4
Browser("editorial » Blog Archive_2").Navigate "http://www.lendingtree.com/home-equity-loans/calculators/loan-consolidation-calculator"
Browser("editorial » Blog Archive_2").Navigate "http://www.lendingtree.com/home-equity-loans/calculators/loan-consolidation-calculator/"
Browser("editorial » Blog Archive_2").Back
Browser("editorial » Blog Archive_2").Navigate "http://editorial.co.in/debt-consolidation/debt-consolidation.php"
Browser("editorial » Blog Archive_2").Back
Browser("editorial » Blog Archive_2").Navigate "http://editorial.co.in/software/software-testing-life-cycle.php"
' Describe every object whose class is Link
Set Des_obj = Description.Create
Des_obj("micclass").Value = "Link"
' Collect all links on the page and show how many there are
Set link_col = Browser("editorial » Blog Archive_2").Page("editorial » Blog Archive").ChildObjects(Des_obj)
MsgBox link_col.Count
Browser("editorial » Blog Archive_2").CloseAllTabs


Find the tooltips on a specific website

Dim descImage, listImages, attrAltText, attrSrcText, i
Browser("Browser_2").Navigate "http://www.medusind.com/"
Browser("US Healthcare Revenue").Page("US Healthcare Revenue").Image("Medusind Solutions - Enabling").Click 150,9
Browser("Browser_2").Navigate "http://www.medusind.com/index.asp"
' Describe every image on the page
Set descImage = Description.Create
descImage("html tag").Value = "IMG"
Set listImages = Browser("Webpage error").Page("US Healthcare Revenue").ChildObjects(descImage)
For i = 0 To listImages.Count - 1
    ' Read the alt text (used here as the tooltip) and the image source
    attrAltText = listImages(i).GetROProperty("alt")
    attrSrcText = listImages(i).GetROProperty("src")
    If attrAltText <> "" Then
        MsgBox "Image src: " & attrSrcText & vbNewLine & "Tooltip: " & attrAltText
    End If
Next
Browser("Webpage error").CloseAllTabs


Message window validation

Dim message
' Start the Flight Reservation sample application if the Login dialog is not open
If Not Dialog("Login").Exist(2) Then
    SystemUtil.Run "C:\Program Files\HP\QuickTest Professional\samples\flight\app\flight4a.exe","","C:\Program Files\HP\QuickTest Professional\samples\flight\app\",""
End If
Dialog("Login").Activate
Dialog("Login").WinEdit("Agent Name:").Set "rajan"
Dialog("Login").WinEdit("Agent Name:").Type micTab
Dialog("Login").WinEdit("Password:").SetSecure "4e2e616befbf25362991054e9261351ead9a"
Dialog("Login").WinButton("OK").Click
Dialog("Flight Reservations").WinButton("OK").Click
' Open the Help message and read its text
Dialog("Login").WinButton("Help").Click
Dialog("Flight Reservations").Static("The password is 'MERCURY'").Check CheckPoint("The password is 'MERCURY'")
message = Dialog("text:= Login").Dialog("text:= Flight Reservations").Static("window id:= 65535").GetROProperty("text")
Dialog("Flight Reservations").WinButton("OK").Click
' Compare the message text with the expected value
If message = "The password is 'MERCURY'" Then
    Reporter.ReportEvent micPass, "Res", "Correct message: " & message
Else
    Reporter.ReportEvent micFail, "Res", "Incorrect message: " & message
End If


Check combo box values using descriptive programming

Option Explicit
Dim qtp, flight_app, f, t, i, j, x, y
' Start the Flight Reservation sample application and log in if it is not already open
If Not Window("text:=Flight Reservation").Exist(2) Then
    qtp = Environment("ProductDir")
    flight_app = "\samples\flight\app\flight4a.exe"
    SystemUtil.Run qtp & flight_app
    Dialog("text:=Login").Activate
    Dialog("text:=Login").WinEdit("attached text:=Agent Name:").Set "asdf"
    Dialog("text:=Login").WinEdit("attached text:=Password:").SetSecure "4e2d605c46a3b5d32706b9ea1735d00e79319dd2"
    Dialog("text:=Login").WinButton("text:=OK").Click
End If
Window("text:=Flight Reservation").Activate
' Enter the flight date
Window("text:=Flight Reservation").ActiveX("acx_name:=MaskEdBox","window id:=0").Type "121212"
f = Window("text:=Flight Reservation").WinComboBox("attached text:=Fly From:").GetItemsCount
' Select every "Fly From" city in turn
For i = 0 To f - 1
    Window("text:=Flight Reservation").WinComboBox("attached text:=Fly From:").Select i
    x = Window("text:=Flight Reservation").WinComboBox("attached text:=Fly From:").GetROProperty("text")
    t = Window("text:=Flight Reservation").WinComboBox("attached text:=Fly To:","x:=244","y:=143").GetItemsCount
    ' For each "Fly To" city, check that it differs from the "Fly From" city
    For j = 0 To t - 1
        Window("text:=Flight Reservation").WinComboBox("attached text:=Fly To:","x:=244","y:=143").Select j
        y = Window("text:=Flight Reservation").WinComboBox("attached text:=Fly To:","x:=244","y:=143").GetROProperty("text")
        If x <> y Then
            Reporter.ReportEvent micPass, "Res", "Test passed"
        Else
            Reporter.ReportEvent micFail, "Res", "Test failed"
        End If
    Next
Next


Getting dynamic text from a web page using a text output value

Step 1: Open Google and search for: site:motevich.blogspot.com QTP

Step 2: Click the Google Search button.

Step 3: Wait for the search results.

Step 4: Below the Google search bar, note the number of results displayed (for example, 1000 or 2000).

Step 5: This result count is the only value we want to capture.

Step 6: Change the search term to "test" instead of "QTP".

Step 7: Click the Google Search button.

Step 8: Wait for the search results.

Step 9: The result count is now different (for example, 3000 or 4000), which is why it has to be captured dynamically.

Step 10: Stop recording.

Step 11: In the Active Screen, select the value you want to capture and right-click it.

Step 12: Choose the text output value option; the output value properties window opens.

Step 13: Adjust the settings in that window as needed, for example the Data Table column that stores the captured text ("count" in the script below).

Step 14: Click OK.

Step 15: Run the script again.

Browser("Google").Page("Google").WebEdit("q").Set "site:motevich.blogspot.com QTP"
Browser("Google").Page("Google").WebButton("Google Search").Click
Browser("Google").Page("site: motevich.blogspot.com").Sync
' Run the text output value; the captured result count is stored in the Data Table
Browser("Google").Page("Google").Output CheckPoint("ResCount_2")
MsgBox DataTable.Value("count")
Browser("Google").CloseAllTabs


Verify a checkpoint and branch on whether it passes or fails

Dim Str
Dialog("Login").WinEdit("Agent Name:").Set "rajan"
Dialog("Login").WinEdit("Agent Name:").Type micTab
Dialog("Login").WinEdit("Password:").SetSecure "4e2558dc476c11a2ea3597e2545811aef6477598"
Dialog("Login").WinButton("OK").Click
Window("Flight Reservation").WinComboBox("Fly To:").Check CheckPoint("Fly To:_2")
' Check returns True if the checkpoint passes and False if it fails
Str = Window("Flight Reservation").WinComboBox("Fly To:").Check(CheckPoint("Fly To:"))
MsgBox Str
If Str = True Then
    process()
Else
    exitaction()
End If

' Select a destination, confirm, then close the application
Private Function process()
    Window("Flight Reservation").WinComboBox("Fly To:").Select "London"
    Window("Flight Reservation").Dialog("Flight Reservations").WinButton("OK").Click
    exitaction()
End Function

' Close the Flight Reservation window
Private Function exitaction()
    Window("Flight Reservation").Close
End Function


Open various applications using VBScript

' Launch a program, document or URL through the Windows shell
Public Function Launch_App(arg1)
    Dim wsh
    Set wsh = CreateObject("WScript.Shell")
    wsh.Run arg1
    Set wsh = Nothing
End Function

' Wait is a QTP statement that pauses for the given number of seconds
Call Launch_App("notepad.exe")
Wait 1
Call Launch_App("cmd.exe")
Wait 1
Call Launch_App("http://www.google.com")
Wait 1
Call Launch_App("calc.exe")


Run multiple QTP test scripts from the command prompt and display the results in the command prompt itself

' Save this script as a .vbs file and run it with cscript from the command prompt
Dim App, QTP_Test, res_obj, i
Dim QTP_Tests(3)                                            ' index 0 is unused
Set App = CreateObject("QuickTest.Application")             ' create the Application object
App.Launch                                                  ' start QuickTest
App.Visible = True                                          ' make QuickTest visible
QTP_Tests(1) = "C:\Users\perinbarajani\Documents\HP\QuickTest Professional\Tests\pagecheckpoint"
QTP_Tests(2) = "C:\Users\perinbarajani\Documents\HP\QuickTest Professional\Tests\textcheckpoint"
QTP_Tests(3) = "C:\Users\perinbarajani\Documents\HP\QuickTest Professional\Tests\Tooltip"
Set res_obj = CreateObject("QuickTest.RunResultsOptions")   ' create the run results options object
For i = 1 To UBound(QTP_Tests)                              ' run each test in turn
    App.Open QTP_Tests(i), True                             ' open the test in read-only mode
    Set QTP_Test = App.Test
    res_obj.ResultsLocation = QTP_Tests(i) & "\QTPResults"  ' store results inside the test folder
    QTP_Test.Run res_obj, True                              ' wait until the run finishes
    WScript.Echo QTP_Tests(i) & ": " & QTP_Test.LastRunResults.Status   ' print the result in the console
    QTP_Test.Close
Next
App.Quit                                                    ' quit QuickTest
Set res_obj = Nothing                                       ' release the run results options object
Set QTP_Test = Nothing                                      ' release the test object
Set App = Nothing                                           ' release the application object


Wednesday, September 7, 2011

Bugs, defects and issues

This article explains the terms bugs, defects and issues.

All three terms broadly mean the same thing: a problem in software.

Background of the Term "Bug"

In 1947, a huge electromechanical computer (the Harvard Mark II) suddenly stopped functioning. Operators traced the problem to a bug, a moth trapped in one of its relays, and fixed it by removing the bug. The software terms "bug tracking" and "bug fixing" are often traced back to this story!

Below is the picture of the first real bug reported:

For several years, the terms "bug" and "defect" were widely used in the software development process to indicate problems in software. However, software engineers started questioning these terms because, in many cases, they argued that a certain "bug" is not a bug but a "feature", or "it is how the customer originally asked for it". To avoid conflicts between the testing and development teams, several companies now use a different term: "software issue".

Even though both "issue" and "bug" indicate some kind of problem in software, developers feel the term "issue" is less offensive than "bug"! This is because a bug directly indicates a problem in the code they wrote, while "issue" is a general term that covers any kind of problem in the software, including wrong requirements, bad design, etc.

Whatever problems the QA or testing team finds, they call them "issues". An issue may or may not be a bug. A tester may report a feature as an "issue" because it is not what the customer wants, even if it is a nice feature. Or, if the software is not delivered to the QA team on the planned date, that can be reported as an "issue" too.

The tester reports issues, and their role ends there. It is the job of the product manager to decide whether and how to solve each issue. Depending on the nature of the issue, the product manager assigns it to the appropriate team to resolve. The product manager may even decide to "waive" the issue if they feel it is not a problem. If the issue is a bug, it is assigned to the developers, who fix the code. When the bug is fixed, the testing team retests the software and verifies the fix. Once the issue is fixed, its status is changed to "closed".

An issue can be resolved in different ways, depending on it's nature. If it is a software bug, it goes to the developer to correct the code and program. If it is due to wrong requirement, it goes to the customer or marketing to correct the requirement. If the issue was caused by bad configuration in the testing computer, it will be assigned to the appropriate hardware representative to correct the configuration problem.

Software developers like the term "issue" rather than "bug" because the term "issue" does not really indicate that there is a problem in their code ! The term "issue" is becoming the standard in software testing process to indicate problems in software.

All three of these terms mean the same thing: each represents a problem in the software.

Background of Bug

In 1947, the Harvard Mark II, a huge electromechanical computer, suddenly stopped working. Operators traced the problem to a moth trapped in one of its relays and fixed it by removing the insect. The incident is often cited as the origin of "bug tracking" and "bug fixing" in software, although engineers were already calling technical faults "bugs" before then.

Below is a picture of the first actual bug reported:

For many years, the terms "bug" and "defect" were widely used in the software development process to indicate problems in software. However, software engineers started questioning these terms. In many cases they argued that a particular "bug" was not a bug but a "feature", or "exactly how the customer originally asked for it". To avoid conflicts between the testing and development teams, several companies now use a different term: "software issue".

Both "issue" and "bug" indicate some kind of problem in software, but developers find the term "issue" less offensive than "bug". This is because "bug" points directly at a problem in the code they wrote. "Issue" is a general term that covers any kind of problem in the software, including wrong requirements, bad design, etc.

Whatever problems the QA or testing team finds, they call them "issues". An issue may or may not be a bug. A tester may report a feature as an "issue" because it is not what the customer wants, even though it is a nice feature. Likewise, if the software is not delivered to the QA team on the planned date, that can be reported as an "issue".

The tester reports issues, and his role ends there. It is the product manager's job to decide whether and how to solve each issue. Depending on the nature of the issue, the product manager assigns it to the appropriate team. The product manager may even decide to "waive the issue" if he feels that it is not a problem. If the issue is a bug, it is assigned to the developers, who fix the code. When the bug is fixed, the testing team re-tests the software and verifies the fix. Once the issue is confirmed fixed, its status is changed to "closed".

An issue can be resolved in different ways, depending on its nature. If it is a software bug, it goes to the developers to correct the code. If it is due to a wrong requirement, it goes to the customer or marketing to correct the requirement. If the issue was caused by a bad configuration on the testing computer, it is assigned to the appropriate hardware representative to correct the configuration.
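The workflow in the two paragraphs above behaves like a small state machine. Below is a minimal Python sketch of it, offered as an illustration rather than as any particular bug tracker's model. The `Status` values, the `Issue` class and the `ROUTING` table are hypothetical names. Sending a fixed issue back to ASSIGNED when the re-test fails is an assumption; the text does not say what happens in that case.

```python
from enum import Enum, auto


class Status(Enum):
    REPORTED = auto()  # logged by the tester
    ASSIGNED = auto()  # routed to a team by the product manager
    WAIVED = auto()    # product manager decided it is not a problem
    FIXED = auto()     # responsible team reports it resolved
    CLOSED = auto()    # testing team re-tested and verified the fix


# Allowed status changes. FIXED -> ASSIGNED (re-test fails) is an
# assumption; the workflow described above does not cover that case.
TRANSITIONS = {
    Status.REPORTED: {Status.ASSIGNED, Status.WAIVED},
    Status.ASSIGNED: {Status.FIXED},
    Status.FIXED: {Status.CLOSED, Status.ASSIGNED},
    Status.WAIVED: set(),
    Status.CLOSED: set(),
}

# Hypothetical routing table: who resolves each kind of issue.
ROUTING = {
    "software bug": "developers",
    "wrong requirement": "customer/marketing",
    "bad configuration": "hardware representative",
}


class Issue:
    def __init__(self, title: str, kind: str) -> None:
        self.title = title
        self.kind = kind
        self.status = Status.REPORTED
        self.owner = None

    def _move(self, new_status: Status) -> None:
        # Reject any status change the workflow does not allow.
        if new_status not in TRANSITIONS[self.status]:
            raise ValueError(f"cannot move from {self.status.name} to {new_status.name}")
        self.status = new_status

    def assign(self) -> None:
        """Product manager routes the issue according to its kind."""
        owner = ROUTING[self.kind]  # KeyError for an unknown kind
        self._move(Status.ASSIGNED)
        self.owner = owner

    def waive(self) -> None:
        self._move(Status.WAIVED)

    def mark_fixed(self) -> None:
        self._move(Status.FIXED)

    def retest(self, passed: bool) -> None:
        """Testing team verifies the fix; a failed re-test sends it back."""
        self._move(Status.CLOSED if passed else Status.ASSIGNED)


issue = Issue("Login button does nothing", "software bug")
issue.assign()
issue.mark_fixed()
issue.retest(passed=True)
print(issue.owner, issue.status.name)  # developers CLOSED
```

Keeping the allowed transitions in one table makes an illegal step, such as assigning a waived issue, fail loudly instead of silently corrupting the status.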

Software developers prefer the term "issue" to "bug" because "issue" does not necessarily indicate a problem in their code. The term "issue" is becoming the standard in the software testing process for problems in software.

Monday, August 29, 2011

Check Checkbox using Descriptive programming

```vbscript
Option Explicit

Dim qtp, flight_app, f, t, i, j, x, y

' Start the sample Flight Reservation application and log in, unless it is already open
If Not Window("text:=Flight Reservation").Exist(2) Then
    qtp = Environment("ProductDir")
    flight_app = "\samples\flight\app\flight4a.exe"
    SystemUtil.Run qtp & flight_app

    Dialog("text:=Login").Activate
    Dialog("text:=Login").WinEdit("attached text:=Agent Name:").Set "asdf"
    Dialog("text:=Login").WinEdit("attached text:=Password:").SetSecure "4e2d605c46a3b5d32706b9ea1735d00e79319dd2"
    Dialog("text:=Login").WinButton("text:=OK").Click
End If

Window("text:=Flight Reservation").Activate

' Enter the date of flight
Window("text:=Flight Reservation").ActiveX("acx_name:=MaskEdBox", "window id:=0").Type "121212"

' Try every Fly From city against every Fly To city
f = Window("text:=Flight Reservation").WinComboBox("attached text:=Fly From:").GetItemsCount
For i = 0 To f - 1
    Window("text:=Flight Reservation").WinComboBox("attached text:=Fly From:").Select i
    x = Window("text:=Flight Reservation").WinComboBox("attached text:=Fly From:").GetROProperty("text")

    t = Window("text:=Flight Reservation").WinComboBox("attached text:=Fly To:", "x:=244", "y:=143").GetItemsCount
    For j = 0 To t - 1
        Window("text:=Flight Reservation").WinComboBox("attached text:=Fly To:", "x:=244", "y:=143").Select j
        y = Window("text:=Flight Reservation").WinComboBox("attached text:=Fly To:", "x:=244", "y:=143").GetROProperty("text")

        ' The destination must never be the same as the origin
        If x <> y Then
            Reporter.ReportEvent micPass, "Res", "Test passed"
        Else
            Reporter.ReportEvent micFail, "Res", "Test failed"
        End If
    Next
Next
```

Count Buttons of Flight reservation window

```vbscript
' Launch the sample Flight Reservation application
SystemUtil.Run "C:\Program Files\HP\QuickTest Professional\samples\flight\app\flight4a.exe", "", "C:\Program Files\HP\QuickTest Professional\samples\flight\app\", ""

' Log in
Dialog("Login").WinEdit("Agent Name:").Set "rajan"
Dialog("Login").WinEdit("Agent Name:").Type micTab
Dialog("Login").WinEdit("Password:").SetSecure "4e1ebb0beb016ff0acd0c8d19e774cb573"
Dialog("Login").WinButton("OK").Click

' Create an order from Denver to London
Window("Flight Reservation").ActiveX("MaskEdBox").Type "121212"
Window("Flight Reservation").WinComboBox("Fly From:").Select "Denver"
Window("Flight Reservation").WinComboBox("Fly To:").Select "London"
Window("Flight Reservation").WinButton("FLIGHT").Click
Window("Flight Reservation").Dialog("Flights Table").WinList("From").Select "20262 DEN 10:12 AM LON 05:23 PM AA $112.20"
Window("Flight Reservation").Dialog("Flights Table").WinButton("OK").Click
Window("Flight Reservation").WinEdit("Name:").Set "ranab"
Window("Flight Reservation").WinButton("Insert Order").Click

' Count the buttons on the main window (Count_Buttons is defined in a function library)
Call Count_Buttons()

Window("Flight Reservation").Close
```

Write the function below in a separate function library, and associate the library with the test as a resource before running the script:

```vbscript
' Counts the WinButton objects on the Flight Reservation window,
' displays the total and returns it
Function Count_Buttons()
    Dim oButton, Buttons, ToButtons

    ' Description that matches every button
    Set oButton = Description.Create
    oButton("Class Name").Value = "WinButton"

    ' Collect all matching child objects of the main window
    Set Buttons = Window("text:=Flight Reservation").ChildObjects(oButton)
    ToButtons = Buttons.Count

    MsgBox ToButtons
    Count_Buttons = ToButtons
End Function
```



Verify a checkpoint and, depending on whether it passes or fails, handle the rest of the flow with functions

```vbscript
Dim Str

' Log in
Dialog("Login").WinEdit("Agent Name:").Set "rajan"
Dialog("Login").WinEdit("Agent Name:").Type micTab
Dialog("Login").WinEdit("Password:").SetSecure "4e2558dc476c1aef6477598"
Dialog("Login").WinButton("OK").Click

Window("Flight Reservation").WinComboBox("Fly To:").Check CheckPoint("Fly To:_2")

' Check returns True if the checkpoint passes and False otherwise
Str = Window("Flight Reservation").WinComboBox("Fly To:").Check(CheckPoint("Fly To:"))
MsgBox Str

If Str Then
    Call process()
Else
    Call exitaction()
End If

' Runs when the checkpoint passes: select a destination, then close the application
Private Function process()
    Window("Flight Reservation").WinComboBox("Fly To:").Select "London"
    Window("Flight Reservation").Dialog("Flight Reservations").WinButton("OK").Click
    Call exitaction()
End Function

' Closes the application
Private Function exitaction()
    Window("Flight Reservation").Close
End Function
```

Friday, August 19, 2011

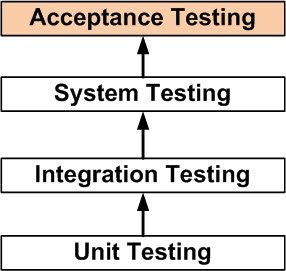

Acceptance Testing

DEFINITION

Acceptance Testing is a level of the software testing process where a system is tested for acceptability.

The purpose of this test is to evaluate the system’s compliance with the business requirements and assess whether it is acceptable for delivery.

ANALOGY

During the process of manufacturing a ballpoint pen, the cap, the body, the tail and clip, the ink cartridge and the ballpoint are produced separately and unit tested separately. When two or more units are ready, they are assembled and Integration Testing is performed. When the complete pen is integrated, System Testing is performed. Once the System Testing is complete, Acceptance Testing is performed so as to confirm that the ballpoint pen is ready to be made available to the end-users.

METHOD

The Black Box Testing method is usually used in Acceptance Testing.

Testing usually does not follow a strict procedure and is not scripted; it is rather ad hoc.

TASKS

- Acceptance Test Plan: Prepare, Review, Rework, Baseline
- Acceptance Test Cases/Checklist: Prepare, Review, Rework, Baseline
- Acceptance Test: Perform

When is it performed?

Acceptance Testing is performed after System Testing and before making the system available for actual use.

Who performs it?

Internal Acceptance Testing (Also known as Alpha Testing) is performed by members of the organization that developed the software but who are not directly involved in the project (Development or Testing). Usually, it is the members of Product Management, Sales and/or Customer Support.

External Acceptance Testing is performed by people who are not employees of the organization that developed the software.

Customer Acceptance Testing is performed by the customers of the organization that developed the software. They are the ones who asked the organization to develop the software for them. (This applies when the software is not owned by the organization that developed it.)

User Acceptance Testing (Also known as Beta Testing) is performed by the end users of the software. They can be the customers themselves or the customers’ customers.

Definition by ISTQB

acceptance testing: Formal testing with respect to user needs, requirements, and business processes conducted to determine whether or not a system satisfies the acceptance criteria and to enable the user, customers or other authorized entity to determine whether or not to accept the system.


System Testing

DEFINITION

System Testing is a level of the software testing process where a complete, integrated system/software is tested.

The purpose of this test is to evaluate the system’s compliance with the specified requirements.

ANALOGY

During the process of manufacturing a ballpoint pen, the cap, the body, the tail, the ink cartridge and the ballpoint are produced separately and unit tested separately. When two or more units are ready, they are assembled and Integration Testing is performed. When the complete pen is integrated, System Testing is performed.

METHOD

The Black Box Testing method is usually used.

TASKS

- System Test Plan: Prepare, Review, Rework, Baseline
- System Test Cases: Prepare, Review, Rework, Baseline
- System Test: Perform

When is it performed?

System Testing is performed after Integration Testing and before Acceptance Testing.

Who performs it?

Normally, independent Testers perform System Testing.

Definition by ISTQB

system testing: The process of testing an integrated system to verify that it meets specified requirements.


Integration Testing

DEFINITION

Integration Testing is a level of the software testing process where individual units are combined and tested as a group.

The purpose of this level of testing is to expose faults in the interaction between integrated units.

Test drivers and test stubs are used to assist in Integration Testing.
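To make "faults in the interaction between integrated units" concrete, here is a small hypothetical Python sketch. It has two units that each pass their own unit tests but disagree at the interface: one works in cents, the other in dollars. All names are invented for illustration. Only a test that exercises the two units together reveals the mismatch.

```python
# Unit 1 (hypothetical): returns the price of an item in cents.
def price_in_cents(item: str) -> int:
    prices = {"pen": 150, "notebook": 499}
    return prices[item]


# Unit 2 (hypothetical): formats an invoice line from a price in dollars.
def format_line(item: str, price_dollars: float) -> str:
    return f"{item}: ${price_dollars:.2f}"


# Both units pass their own unit tests in isolation.
assert price_in_cents("pen") == 150
assert format_line("pen", 1.5) == "pen: $1.50"


# Two ways of integrating them; the first ignores the unit mismatch.
def invoice_line_faulty(item: str) -> str:
    return format_line(item, price_in_cents(item))  # passes cents as dollars


def invoice_line_fixed(item: str) -> str:
    return format_line(item, price_in_cents(item) / 100)


def integration_test(build_line) -> bool:
    """Exercises the interaction between the two units."""
    return build_line("pen") == "pen: $1.50"


print(integration_test(invoice_line_faulty))  # False
print(integration_test(invoice_line_fixed))   # True
```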

Note: The definition of a unit is debatable; it could mean any of the following:

1. the smallest testable part of software
2. a ‘module’, which could consist of many of (1)
3. a ‘component’, which could consist of many of (2)

ANALOGY

During the process of manufacturing a ballpoint pen, the cap, the body, the tail and clip, the ink cartridge and the ballpoint are produced separately and unit tested separately. When two or more units are ready, they are assembled and Integration Testing is performed. For example, whether the cap fits into the body or not.

METHOD

Any of the Black Box, White Box, and Gray Box Testing methods can be used. Normally, the choice depends on your definition of ‘unit’.

TASKS

- Integration Test Plan: Prepare, Review, Rework, Baseline
- Integration Test Cases/Scripts: Prepare, Review, Rework, Baseline
- Integration Test: Perform

When is Integration Testing performed?

Integration Testing is performed after Unit Testing and before System Testing.

Who performs Integration Testing?

Either Developers themselves or independent Testers perform Integration Testing.

APPROACHES

Big Bang is an approach to Integration Testing where all or most of the units are combined and tested in one go. This approach is taken when the testing team receives the entire software in a bundle. So what is the difference between Big Bang Integration Testing and System Testing? The former tests only the interactions between the units, while the latter tests the entire system.

Top Down is an approach to Integration Testing where top-level units are tested first and lower-level units are tested step by step after that. This approach is taken when the top down development approach is followed. Test Stubs are needed to simulate lower-level units that may not be available during the initial phases.
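Here is a minimal Python sketch of a test stub in a Top Down setting. It assumes a hypothetical top-level `OrderService` whose lower-level `PaymentGateway` has not been built yet. The stub implements the same interface and returns a canned answer, so the top-level logic can already be tested.

```python
from typing import List, Protocol, Tuple


class PaymentGateway(Protocol):
    """Interface of a lower-level unit that has not been built yet (hypothetical)."""

    def charge(self, account: str, amount_cents: int) -> bool: ...


class PaymentGatewayStub:
    """Test stub: stands in for the real gateway and returns a canned answer."""

    def __init__(self, approve: bool = True) -> None:
        self.approve = approve
        self.calls: List[Tuple[str, int]] = []  # recorded for later inspection

    def charge(self, account: str, amount_cents: int) -> bool:
        self.calls.append((account, amount_cents))
        return self.approve


class OrderService:
    """Top-level unit under test; it sees the gateway only through its interface."""

    def __init__(self, gateway: PaymentGateway) -> None:
        self.gateway = gateway

    def place_order(self, account: str, amount_cents: int) -> str:
        if amount_cents <= 0:
            return "rejected"
        return "confirmed" if self.gateway.charge(account, amount_cents) else "declined"


stub = PaymentGatewayStub(approve=False)
print(OrderService(stub).place_order("acct-1", 500))  # declined
print(stub.calls)                                      # [('acct-1', 500)]
```

Switching `approve` between `True` and `False` lets one stub drive both branches of `place_order`. Recording `calls` also lets a test check what the top-level unit passed down.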

Bottom Up is an approach to Integration Testing where bottom-level units are tested first and upper-level units are tested step by step after that. This approach is taken when the bottom up development approach is followed. Test Drivers are needed to simulate higher-level units that may not be available during the initial phases.
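Bottom Up needs the mirror image of a stub. A test driver is a small piece of code that calls a finished low-level unit the way its not-yet-written caller eventually will. In this hypothetical Python sketch, `apply_discount` is the low-level unit and `discount_driver` stands in for the missing billing module.

```python
from typing import List


def apply_discount(amount_cents: int, percent: int) -> int:
    """Low-level unit, ready before the higher-level billing module (hypothetical)."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return amount_cents - amount_cents * percent // 100


def discount_driver() -> List[str]:
    """Test driver: plays the role of the missing caller, feeds inputs to
    apply_discount and compares the results with expected values."""
    cases = [(1000, 0, 1000), (1000, 10, 900), (999, 50, 500), (1000, 100, 0)]
    failures = []
    for amount, percent, expected in cases:
        actual = apply_discount(amount, percent)
        if actual != expected:
            failures.append(f"apply_discount({amount}, {percent}) = {actual}, expected {expected}")
    # The driver also checks that invalid input is rejected.
    try:
        apply_discount(1000, 150)
        failures.append("apply_discount(1000, 150) did not raise ValueError")
    except ValueError:
        pass
    return failures


print(discount_driver())  # []
```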

Sandwich/Hybrid is an approach to Integration Testing which is a combination of Top Down and Bottom Up approaches.

TIPS

Ensure that you have a proper Detailed Design document in which the interactions between units are clearly defined. In fact, you will not be able to perform Integration Testing without this information.

Ensure that you have a robust Software Configuration Management system in place. Otherwise, you will have a tough time tracking the right version of each unit, especially if the number of units to be integrated is large.

Make sure that each unit is first unit tested before you start Integration Testing.

As far as possible, automate your tests, especially when you use the Top Down or Bottom Up approach, since regression testing is important each time you integrate a unit, and manual regression testing can be inefficient.

Definition by ISTQB

integration testing: Testing performed to expose defects in the interfaces and in the interactions between integrated components or systems. See also component integration testing, system integration testing.

component integration testing: Testing performed to expose defects in the interfaces and interaction between integrated components.

system integration testing: Testing the integration of systems and packages; testing interfaces to external organizations (e.g. Electronic Data Interchange, Internet).


Unit Testing

DEFINITION

Unit Testing is a level of the software testing process where individual units/components of a software/system are tested. The purpose is to validate that each unit of the software performs as designed.

A unit is the smallest testable part of software. It usually has one or a few inputs and usually a single output. In procedural programming a unit may be an individual program, function, procedure, etc. In object-oriented programming, the smallest unit is a method, which may belong to a base/super class, abstract class or derived/child class. (Some treat a module of an application as a unit. This is to be discouraged as there will probably be many individual units within that module.)

Unit testing frameworks, drivers, stubs and mock or fake objects are used to assist in unit testing.
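The following is a minimal unit test written with a framework, using Python's standard `unittest` module. The unit under test, `is_leap_year`, is a hypothetical example. It was chosen because it has one input, one output and a few distinct cases.

```python
import unittest


def is_leap_year(year: int) -> bool:
    """Unit under test (hypothetical): Gregorian leap-year rule."""
    return year % 4 == 0 and (year % 100 != 0 or year % 400 == 0)


class IsLeapYearTest(unittest.TestCase):
    def test_divisible_by_four(self):
        self.assertTrue(is_leap_year(2024))

    def test_century_is_not_leap(self):
        self.assertFalse(is_leap_year(1900))

    def test_every_400_years_is_leap(self):
        self.assertTrue(is_leap_year(2000))

    def test_ordinary_year(self):
        self.assertFalse(is_leap_year(2023))
```

The standard runner (for example, `python -m unittest` pointed at the file) discovers the `test_*` methods and reports each failure separately.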

METHOD

Unit Testing is performed by using the White Box Testing method.

When is it performed?

Unit Testing is the first level of testing and is performed prior to Integration Testing.

Who performs it?

Unit Testing is normally performed by software developers themselves or their peers. In rare cases it may also be performed by independent software testers.

TASKS

- Unit Test Plan: Prepare, Review, Rework, Baseline
- Unit Test Cases/Scripts: Prepare, Review, Rework, Baseline
- Unit Test: Perform

BENEFITS

Unit testing increases confidence in changing/maintaining code. If good unit tests are written and run every time any code is changed, defects introduced by the change are very likely to be caught promptly. If unit testing is not in place, the most one can do is hope for the best and wait until the test results from higher levels of testing are out. Also, if the code has already been made less interdependent to make unit testing possible, changes to any part of it have fewer unintended effects.

Code is more reusable. To make unit testing possible, code needs to be modular, and modular code is easier to reuse.

Development is faster. How? Without unit testing, you write your code and perform that fuzzy ‘developer test’: you set some breakpoints, fire up the GUI, provide a few inputs that hopefully hit your code and hope that you are all set. With unit testing, you write the test, write the code and run the tests. Writing tests takes time, but that time is recovered because the tests run very quickly: you need not fire up the GUI and provide all those inputs. And, of course, unit tests are more reliable than ‘developer tests’. Development is faster in the long run too. How? The effort required to find and fix defects found during unit testing is peanuts compared with the effort for defects found during system testing or acceptance testing.

The cost of fixing a defect detected during unit testing is lower than that of defects detected at higher levels. Compare the cost (time, effort, destruction, humiliation) of a defect detected during acceptance testing or, say, when the software is live.

Debugging is easy. When a test fails, only the latest changes need to be debugged. With testing at higher levels, changes made over the span of several days/weeks/months need to be debugged.

Code is more reliable, because each unit has been verified on its own before it is combined with the others.

TIPS

Find a tool/framework for your language.

Do not create test cases for ‘everything’: some code will be exercised indirectly by other tests. Instead, focus on the tests that impact the behavior of the system.

Isolate the development environment from the test environment.

Use test data that is close to that of production.

Before fixing a defect, write a test that exposes the defect. Why? First, you will later be able to catch the defect if you do not fix it properly. Second, your test suite is now more comprehensive. Third, you will most probably be too lazy to write the test after you have already ‘fixed’ the defect.
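Here is a sketch of this tip in Python with `unittest`, built around a hypothetical defect report. In the report, `count_words` over-counted text containing repeated spaces because it split on a single space character. The test reproduces the reported input and fails against the old code. After the fix, it stays in the suite as a regression test.

```python
import unittest


def count_words(text: str) -> int:
    """Return the number of whitespace-separated words in text.

    Hypothetical defect: the old version was len(text.split(" ")), which
    also counts the empty strings between consecutive spaces ("a  b" -> 3).
    Calling split() with no argument treats any run of whitespace as one separator.
    """
    return len(text.split())


class CountWordsRegressionTest(unittest.TestCase):
    def test_repeated_spaces(self):
        # Written before the fix; it failed against the old version (6 != 3).
        self.assertEqual(count_words("unit  testing   first"), 3)

    def test_empty_string(self):
        # The old version returned 1 here, since "".split(" ") == [""].
        self.assertEqual(count_words(""), 0)
```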

Write test cases that are independent of each other and of external systems. For example, if a class depends on a database, do not write a test case that interacts with the database to test the class. Instead, create an abstract interface around that database connection and implement that interface with a mock object.
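This Python sketch illustrates the idea with an abstract `UserStore` interface and a mock built with the standard library's `unittest.mock`. `UserStore`, `Greeter` and the e-mail data are hypothetical. In real code, the production implementation of `UserStore` would wrap the actual database connection.

```python
import unittest
from abc import ABC, abstractmethod
from typing import Optional
from unittest import mock


class UserStore(ABC):
    """Abstract interface wrapped around the database connection (hypothetical)."""

    @abstractmethod
    def find_email(self, user_id: int) -> Optional[str]:
        ...


class Greeter:
    """Class under test: depends on the UserStore interface, not on a real database."""

    def __init__(self, store: UserStore) -> None:
        self.store = store

    def greeting(self, user_id: int) -> str:
        email = self.store.find_email(user_id)
        return f"Hello, {email}" if email else "Hello, guest"


class GreeterTest(unittest.TestCase):
    def setUp(self):
        # A fresh mock per test keeps the tests independent of each other.
        self.store = mock.Mock(spec=UserStore)
        self.greeter = Greeter(self.store)

    def test_known_user(self):
        self.store.find_email.return_value = "ann@example.com"
        self.assertEqual(self.greeter.greeting(1), "Hello, ann@example.com")
        self.store.find_email.assert_called_once_with(1)

    def test_unknown_user(self):
        self.store.find_email.return_value = None
        self.assertEqual(self.greeter.greeting(2), "Hello, guest")
```

Passing `spec=UserStore` makes the mock reject attributes that the interface does not define, so a typo in a method name fails the test instead of passing silently.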

Aim at covering all paths through the unit. Pay particular attention to loop conditions.
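To illustrate path coverage, including loop conditions, here is a hypothetical `largest` function in Python with one test per path. The paths are the early return for an empty list, a loop that runs zero times, and a loop that runs several times with and without finding a new maximum.

```python
import unittest
from typing import List, Optional


def largest(values: List[int]) -> Optional[int]:
    """Return the largest value, or None for an empty list (hypothetical unit)."""
    if not values:           # path 1: empty input, loop never reached
        return None
    best = values[0]
    for v in values[1:]:     # loop may run zero, one or many times
        if v > best:         # path 2: a new maximum is found
            best = v         # (path 3: v <= best, best unchanged)
    return best


class LargestPathTest(unittest.TestCase):
    def test_empty_list(self):
        self.assertIsNone(largest([]))

    def test_loop_runs_zero_times(self):
        self.assertEqual(largest([7]), 7)

    def test_new_maximum_found(self):
        self.assertEqual(largest([1, 5, 3]), 5)

    def test_first_value_stays_maximum(self):
        self.assertEqual(largest([9, 2, 4]), 9)

    def test_equal_values(self):
        self.assertEqual(largest([4, 4]), 4)
```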

Make sure you are using a version control system to keep track of your code as well as your test cases.

In addition to writing cases that verify behavior, write cases that check the performance of the code.

Perform unit tests continuously and frequently.

ONE MORE REASON

Let's say you have a program comprising two units. The only test you perform is system testing. [You skip unit and integration testing.] During testing, you find a bug. Now, how will you determine the cause of the problem?

Is the bug due to an error in unit 1?

Is the bug due to an error in unit 2?

Is the bug due to errors in both units?

Is the bug due to an error in the interface between the units?

Is the bug due to an error in the test or test case?

Unit testing is often neglected but it is, in fact, the most important level of testing.

Unit Testing is a level of the software testing process where individual units/components of a software/system are tested. The purpose is to validate that each unit of the software performs as designed.

A unit is the smallest testable part of software. It usually has one or a few inputs and usually a single output. In procedural programming a unit may be an individual program, function, procedure, etc. In object-oriented programming, the smallest unit is a method, which may belong to a base/super class, abstract class or derived/child class. (Some treat a module of an application as a unit. This is to be discouraged as there will probably be many individual units within that module.)

Unit testing frameworks, drivers, stubs and mock or fake objects are used to assist in unit testing.

METHOD

Unit Testing is performed by using the White Box Testing method.

When is it performed?

Unit Testing is the first level of testing and is performed prior to Integration Testing.

Who performs it?

Unit Testing is normally performed by software developers themselves or their peers. In rare cases, it may also be performed by independent software testers.

TASKS

Unit Test Plan: Prepare, Review, Rework, Baseline

Unit Test Cases/Scripts: Prepare, Review, Rework, Baseline

Unit Test: Perform

BENEFITS

Unit testing increases confidence in changing and maintaining code. If good unit tests are written and run every time the code changes, defects introduced by a change are very likely to be caught promptly. If unit testing is not in place, the most one can do is hope for the best and wait until the results of higher levels of testing are out. Also, if the code has already been made less interdependent to make unit testing possible, any change has less unintended impact on other code.

Code is more reusable. To make unit testing possible, code needs to be modular, and modular code is easier to reuse.

Development is faster. How? If you do not have unit testing in place, you write your code and perform that fuzzy ‘developer test’: you set some breakpoints, fire up the GUI, provide a few inputs that hopefully hit your code, and hope that you are all set. If you have unit testing in place, you write the test, write the code and run the tests. Writing tests takes time, but that time is recovered because the tests take very little time to run: you need not fire up the GUI and provide all those inputs. And, of course, unit tests are more reliable than ‘developer tests’. Development is faster in the long run too. How? The effort required to find and fix defects found during unit testing is peanuts compared to the effort for defects found during system testing or acceptance testing.

The cost of fixing a defect detected during unit testing is lower than that of defects detected at higher levels. Compare it with the cost (time, effort, destruction, humiliation) of a defect detected during acceptance testing or, say, when the software is live.

Debugging is easy. When a test fails, only the latest changes need to be debugged. With testing at higher levels, changes made over the span of several days/weeks/months need to be debugged.

Code is more reliable. Why? I think there is no need to explain this to a sane person.

TIPS

Find a tool/framework for your language.

Do not create test cases for ‘everything’: some things will take care of themselves. Instead, focus on the tests that impact the behavior of the system.

Isolate the development environment from the test environment.

Use test data that is close to that of production.

Before fixing a defect, write a test that exposes the defect. Why? First, you will later be able to catch the defect if you do not fix it properly. Second, your test suite is now more comprehensive. Third, you will most probably be too lazy to write the test after you have already ‘fixed’ the defect.

Write test cases that are independent of each other. For example, if a class depends on a database, do not write a case that interacts with the database to test the class. Instead, create an abstract interface around that database connection and implement that interface with a mock object.

Aim to cover all paths through the unit. Pay particular attention to loop conditions.

Make sure you are using a version control system to keep track of your code as well as your test cases.

In addition to writing cases that verify the behavior, write cases that check the performance of the code.

Perform unit tests continuously and frequently.
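The tip about independent test cases can be sketched as follows with Python's `abc` and `unittest` modules. `UserRepository`, `Greeter` and `FakeUserRepository` are hypothetical names: the class under test depends only on the abstract interface, and the test supplies a hand-written in-memory mock (often called a fake) instead of a real database connection. Creating a fresh mock in `setUp` also keeps each test case independent of the others.

```python
import unittest
from abc import ABC, abstractmethod
from typing import Optional


class UserRepository(ABC):
    """Hypothetical abstract interface wrapped around the database connection."""

    @abstractmethod
    def find_email(self, user_id: int) -> Optional[str]:
        """Return the user's e-mail address, or None if the user is unknown."""


class Greeter:
    """Hypothetical class under test: it depends on the interface, not on a database."""

    def __init__(self, repo: UserRepository):
        self.repo = repo

    def greeting_for(self, user_id: int) -> str:
        email = self.repo.find_email(user_id)
        return f"Hello, {email}" if email is not None else "Hello, guest"


class FakeUserRepository(UserRepository):
    """In-memory mock implementation of the interface; no database is touched."""

    def __init__(self, emails: dict):
        self.emails = dict(emails)
        self.calls = []  # user ids looked up, so tests can check the interaction

    def find_email(self, user_id: int) -> Optional[str]:
        self.calls.append(user_id)
        return self.emails.get(user_id)


class GreeterTest(unittest.TestCase):
    def setUp(self):
        # A fresh mock for every test keeps test cases independent of each other.
        self.repo = FakeUserRepository({1: "ann@example.com"})
        self.greeter = Greeter(self.repo)

    def test_known_user(self):
        self.assertEqual(self.greeter.greeting_for(1), "Hello, ann@example.com")
        self.assertEqual(self.repo.calls, [1])

    def test_unknown_user(self):
        self.assertEqual(self.greeter.greeting_for(42), "Hello, guest")
```

The standard library's `unittest.mock.Mock(spec=UserRepository)` could be used instead of a hand-written class; the hand-written version is shown because it mirrors the "implement that interface" wording of the tip.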

ONE MORE REASON

Let's say you have a program consisting of two units. The only testing you perform is system testing. [You skip unit and integration testing.] During testing, you find a bug. Now, how will you determine the cause of the problem?

Is the bug due to an error in unit 1?

Is the bug due to an error in unit 2?

Is the bug due to errors in both units?

Is the bug due to an error in the interface between the units?

Is the bug due to an error in the test or test case?

Unit testing is often neglected, but it is, in fact, the most important level of testing.

When should we stop testing?

Common factors in deciding when to stop are:

Deadlines (release deadlines, testing deadlines, etc.)

Test cases completed with a certain percentage passed.

Test budget depleted.

Coverage of code/functionality/requirements reaches a specified point.

Bug rate falls below a certain level.

Beta or alpha testing period ends.
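These factors are often written down as explicit exit criteria before testing begins. Below is a minimal sketch, assuming the team tracks the listed metrics; `ProjectStatus`, `stop_reasons` and the thresholds (95% pass rate, 80% coverage, fewer than 2 new bugs per day) are illustrative placeholders, not values from this article. The design choice, also an assumption, is that the deadline, budget and beta-period limits each stop testing on their own, while the quality criteria only count when all of them are met together.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class ProjectStatus:
    today: date
    deadline: date
    budget_left: float        # remaining test budget, any currency unit
    cases_run: int
    cases_passed: int
    coverage: float           # fraction of code/requirements covered, 0.0 to 1.0
    new_bugs_per_day: float
    beta_period_over: bool


# Hypothetical thresholds; every project has to agree on its own values.
MIN_PASS_RATE = 0.95
MIN_COVERAGE = 0.80
MAX_BUG_RATE = 2.0


def stop_reasons(s: ProjectStatus) -> list[str]:
    """Return the reasons why testing may stop; an empty list means keep testing."""
    reasons = []
    # Hard limits: any one of these ends testing by itself.
    if s.today >= s.deadline:
        reasons.append("deadline reached")
    if s.budget_left <= 0:
        reasons.append("test budget depleted")
    if s.beta_period_over:
        reasons.append("beta/alpha period ended")
    # Quality criteria: all three must hold at the same time (an assumption).
    pass_rate = s.cases_passed / s.cases_run if s.cases_run else 0.0
    if (pass_rate >= MIN_PASS_RATE
            and s.coverage >= MIN_COVERAGE
            and s.new_bugs_per_day < MAX_BUG_RATE):
        reasons.append("quality exit criteria met")
    return reasons


status = ProjectStatus(
    today=date(2024, 5, 1), deadline=date(2024, 5, 10), budget_left=5000.0,
    cases_run=200, cases_passed=192, coverage=0.85,
    new_bugs_per_day=1.0, beta_period_over=False,
)
print(stop_reasons(status))  # ['quality exit criteria met']
```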

Why we have to start testing early

Introduction

You have probably heard and read in blogs that “testing should start early in the development life cycle”. In this chapter, we will discuss, very practically, why you should start testing early.

Fact One

Let’s start with the regular software development life cycle:

First we’ve got a planning phase: needs are expressed, people are contacted, meetings are booked. Then the decision is made: we are going to do this project.

After that, analysis is done, followed by the code build.

Now it’s your turn: you can start testing.

Do you think this is what is going to happen? Dream on.

This is what's going to happen:

Planning, analysis and code build will take more time than planned.

That would not be a problem if the total project time were extended. Forget it; most likely you will have to deal with the fact that you must perform the tests in a few days.

The deadline is not going to be moved at all: promises have been made to customers, and project managers are going to lose their bonuses if they deliver past the deadline.

Fact Two

The earlier you find a bug, the cheaper it is to fix it.

If you are able to find the bug while determining the requirements, it is going to be 50 times cheaper (!!) than when you find the same bug in testing.

It will even be 100 times cheaper (!!) than when you find the bug after going live.

Easy to understand: if you find the bug in the requirements definitions, all you have to do is change the text of the requirements. If you find the same bug in final testing, analysis and code build have already taken place, so much more effort has gone into building something that nobody wanted.
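The multipliers above can be turned into a quick estimate. This sketch takes the article's figures at face value (requirements phase = 1, testing = 50, after go-live = 100) and assumes, purely for illustration, that correcting the requirement text takes half an hour.

```python
# Relative cost of fixing the same bug, taken from the figures above
# (requirements phase = 1).
COST_MULTIPLIER = {
    "requirements": 1,
    "testing": 50,
    "after go-live": 100,
}


def fix_cost(phase: str, base_hours: float) -> float:
    """Estimated hours to fix a bug found in `phase`, given the requirements-phase effort."""
    return base_hours * COST_MULTIPLIER[phase]


# Illustrative assumption: fixing the requirement text takes 0.5 hours.
for phase in COST_MULTIPLIER:
    print(f"{phase:>13}: {fix_cost(phase, 0.5):6.1f} hours")
```

With these assumptions, half an hour at the requirements stage becomes 25 hours in testing and 50 hours after go-live.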

Conclusion: start testing early!

This is what you should do:

Golden software testing rules

Introduction

Read these simple golden rules for software testing. They are based on years of practical testing experience and solid theory.

It's all about finding the bug as early as possible:

Start the software testing process as soon as you get the requirement specification document. Review the specification document carefully and get your queries resolved. This way you can easily find bugs in the requirement document (otherwise the development team might develop the software with the wrong functionality), and it often happens that the requirement document gets changed when the test team raises queries.

After reviewing the requirement document, prepare test scenarios and test cases.

Make sure you have at least these 3 software testing levels:

1. Unit and integration testing (performed by the development team or a separate white box testing team)

2. System testing (performed by professional testers)

3. Acceptance testing (performed by end users, sometimes with business analysts and test leads assisting them)

Don’t expect too much of automated testing

Automated testing can be extremely useful and can be a real time saver. But it can also turn out to be a very expensive and ineffective solution. Consider the return on investment (ROI).
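One way to consider ROI is a back-of-the-envelope calculation in hours. The sketch below assumes a simple model (savings = manual effort avoided per run; investment = initial build effort plus maintenance per run) and hypothetical figures; it ignores tool licences, training and other costs a real estimate would include.

```python
def automation_roi(manual_hours_per_run: float,
                   automated_hours_per_run: float,
                   runs: int,
                   build_hours: float,
                   maintenance_hours_per_run: float) -> float:
    """ROI of test automation as a fraction: (savings - investment) / investment."""
    savings = (manual_hours_per_run - automated_hours_per_run) * runs
    investment = build_hours + maintenance_hours_per_run * runs
    if investment <= 0:
        raise ValueError("investment must be positive")
    return (savings - investment) / investment


# Hypothetical suite: 10 h manual vs 1 h automated per run,
# 200 h to build, 2 h of maintenance per run.
for runs in (10, 50):
    print(runs, "runs ->", f"{automation_roi(10, 1, runs, 200, 2):+.0%}")
```

With these numbers the automation is still losing effort after 10 runs (about -59%) and returns +50% after 50 runs; it starts paying off at 29 runs. Estimating that break-even point before committing is the point of the ROI check.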

Deal with resistance

Don't try to become popular by completing tasks ahead of time at the cost of quality. Some testers do this and get appreciation from managers in early project cycles. But you should stick to quality and perform thorough testing. If you have really tested the application fully, you can back it up with numbers (count of bugs reported, test cases prepared, etc.). Your project partners will definitely appreciate the great job you're doing!

Do regression testing for every new release:

Once the development team is done with the bug fixes and gives a release to the testing team, the testing team should perform regression testing in addition to verifying the bug fixes. In early test cycles, regression testing of the entire application is required. In late test cycles, when the application is near UAT, discuss the impact of the bug fixes with the development team and test the affected functionality accordingly.
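The full-versus-impacted selection described above can be sketched as below. It assumes a hypothetical mapping from each regression test to the modules it exercises, and that the set of modules changed by the bug fixes comes out of the discussion with the development team; neither is a specific tool's API.

```python
def select_regression_tests(test_coverage: dict[str, set[str]],
                            changed_modules: set[str],
                            near_uat: bool) -> list[str]:
    """Pick the regression tests to run for a new build.

    test_coverage maps each test name to the modules it exercises (hypothetical data).
    """
    if not near_uat:
        # Early cycles: regression-test the entire application.
        return sorted(test_coverage)
    # Late cycles: only the tests that touch modules affected by the bug fixes.
    return sorted(name for name, modules in test_coverage.items()
                  if modules & changed_modules)


coverage = {
    "test_login": {"auth"},
    "test_checkout": {"cart", "payment"},
    "test_search": {"search"},
}
print(select_regression_tests(coverage, {"payment"}, near_uat=True))
print(select_regression_tests(coverage, {"payment"}, near_uat=False))
```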

Test with real data:

Apart from invalid data entry, testers must test the application with real data. For this, help can be taken from the business analyst and the client.

You can take help from sites like http://www.fakenamegenerator.com/. But in the finance domain, request sample data from the client, because there can be data like $10.87 million, etc.

Keep track of change requests