Different message types should be displayed differently. According to one website I came across, web applications and Windows-based applications typically handle four main message types: information, success, warning, and error. Each message type should be presented in a different color and with a different icon. Validation messages are a special, fifth message type.

1. Information Messages

The purpose of information messages is to inform the user about something relevant. They should be presented in blue, because people associate this color with information regardless of content. An information message can be anything relevant to a user action.


For example, an information message can show help information for the current user, or some tips.

2. Success Messages

Success messages should be displayed after the user successfully performs an operation. By that I mean a complete operation: no partial operations and no errors. For example, the message can say: “Your profile has been saved successfully and a confirmation mail has been sent to the email address you provided.” This means that each operation in this process (saving the profile and sending the email) has been performed successfully.


Show this message type using its own color and icon: green with a check mark icon.

3. Warning Messages

Warning messages should be displayed to the user when an operation could not be completed in full. For example: “Your profile has been saved successfully, but the confirmation mail could not be sent to the email address you provided.” Or: “If you don't finish your profile now, you won't be able to search jobs.” The usual warning color is yellow, and the usual icon is an exclamation mark.

4. Error Messages

Error messages should be displayed when an operation could not be completed at all. Red is very suitable for this, since people associate this color with an alert of any kind.

5. Validation Messages

The author of the source article noticed that many developers can't distinguish between validation messages and other message types (such as error or warning messages). I have often seen a validation message displayed as an error message, which caused confusion in the user's mind.

Validation is all about user input and should be treated that way. ASP.NET has built-in controls that enable full control over user input. The purpose of validation is to force the user to fill in all required fields and to enter values in the correct format. It should therefore be clear to the user that the form will not be submitted if these rules are not met.

That's why I like to style validation messages in a slightly less intense red than error messages and use a red exclamation icon.
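The color and icon conventions described above can be captured in a small lookup table. The following is a minimal sketch in Python rather than ASP.NET. The names `MessageType`, `MessageStyle`, `STYLES`, `render` and `classify` are hypothetical, and the icons for information and error messages are assumptions, since the text only names colors for those two types.

```python
from dataclasses import dataclass
from enum import Enum


class MessageType(Enum):
    INFO = "info"
    SUCCESS = "success"
    WARNING = "warning"
    ERROR = "error"
    VALIDATION = "validation"


@dataclass(frozen=True)
class MessageStyle:
    color: str
    icon: str


# Colors follow the text; the INFO and ERROR icons are assumptions,
# and "light red" stands in for "a slightly less intense red".
STYLES = {
    MessageType.INFO: MessageStyle("blue", "info"),
    MessageType.SUCCESS: MessageStyle("green", "check mark"),
    MessageType.WARNING: MessageStyle("yellow", "exclamation"),
    MessageType.ERROR: MessageStyle("red", "cross"),
    MessageType.VALIDATION: MessageStyle("light red", "red exclamation"),
}


def render(message_type: MessageType, text: str) -> str:
    """Return a plain-text rendering that names the color and icon to use."""
    style = STYLES[message_type]
    return f"[{style.color} | {style.icon}] {text}"


def classify(saved: bool, mail_sent: bool) -> MessageType:
    """Pick a message type for the profile example used in the text."""
    if saved and mail_sent:
        return MessageType.SUCCESS  # complete operation, no errors
    if saved:
        return MessageType.WARNING  # operation only partially completed
    return MessageType.ERROR        # operation not completed at all
```

Keeping the styles in one table means a validation message cannot accidentally be drawn with the error style, which is the confusion the text warns about.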



Monday, December 19, 2011

Tuesday, September 27, 2011

Ten Tips for effective bug tracking

1. Remember that the only person who can close a bug is the person who found it in the first place. Anyone can resolve it, but only the person who saw the bug can really be sure that what they saw is fixed.

2. A good tester will always try to reduce the reproduction steps to the minimum needed to reproduce the bug. This is extremely helpful for the programmer who has to find the bug.

3. There are many ways to resolve a bug. A developer can resolve a bug as fixed, won't fix, postponed, not repro, duplicate, or by design.

4. You will want to keep careful track of versions. Every build of the software that you give to testers should have a build ID number, so that the poor tester doesn't have to retest the bug on a version of the software where it wasn't even supposed to be fixed.

5. “Not repro” means that nobody could ever reproduce the bug. Programmers often use this when the bug report is missing the reproduction steps.

6. If you are a programmer and you are having trouble getting testers to use the bug database, just don't accept bug reports by any other method. If your testers are used to sending you email with bug reports, just bounce the emails back to them with a brief message: “Please put this in the bug database. I can't keep track of emails.”

7. If you are a tester and you are having trouble getting programmers to use the bug database, just don't tell them about bugs; put them in the database and let the database email them.

8. If you are a programmer and only some of your colleagues use the bug database, just start assigning them bugs in the database. Eventually they will get the hint.

9. If you are a manager and nobody seems to be using the bug database that you installed at great expense, start assigning new features to people using bugs. A bug database is also a great “unimplemented feature” database.

10. Avoid the temptation to add new fields to the bug database. Every month or so, somebody will come up with a great idea for a new field to put in the database. You get all kinds of clever ideas, for example: keeping track of the file where the bug was found; keeping track of what % of the time the bug is reproducible; keeping track of how many times the bug occurred; keeping track of which exact versions of which DLLs were installed on the machine where the bug happened. It's very important not to give in to these ideas. If you do, your new bug entry screen will end up with a thousand fields that you need to supply, and nobody will want to input bug reports any more. For the bug database to work, everybody needs to use it, and if entering bugs “formally” is too much work, people will go around the bug database.
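Several of these tips translate directly into how a bug record is modelled. The sketch below is a hypothetical illustration in Python, not the schema of any particular bug tracker: the class and field names are assumptions. It shows a deliberately small set of fields, the resolution states listed in tip 3, a build ID on both ends (tip 4), and the rule that only the original reporter may close a bug (tip 1).

```python
from dataclasses import dataclass
from enum import Enum
from typing import List, Optional


class Resolution(Enum):
    FIXED = "fixed"
    WONT_FIX = "won't fix"
    POSTPONED = "postponed"
    NOT_REPRO = "not repro"
    DUPLICATE = "duplicate"
    BY_DESIGN = "by design"


@dataclass
class Bug:
    # Deliberately few fields (tip 10): every extra field discourages reporting.
    title: str
    repro_steps: List[str]
    opened_by: str
    found_in_build: str
    resolution: Optional[Resolution] = None
    resolved_in_build: Optional[str] = None
    closed: bool = False

    def resolve(self, resolution: Resolution, build_id: str) -> None:
        """Anyone may resolve a bug; the build ID tells testers what to retest."""
        self.resolution = resolution
        self.resolved_in_build = build_id

    def close(self, user: str) -> None:
        """Only the person who opened the bug may close it (tip 1)."""
        if self.resolution is None:
            raise ValueError("bug must be resolved before it can be closed")
        if user != self.opened_by:
            raise PermissionError("only the original reporter can close this bug")
        self.closed = True
```

For example, a bug opened by `tester_a` and resolved by a developer as `Resolution.FIXED` in `build-102` stays open until `tester_a` calls `close`.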


Friday, August 19, 2011

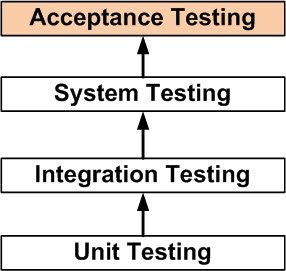

Acceptance Testing

DEFINITION

Acceptance Testing is a level of the software testing process where a system is tested for acceptability.

The purpose of this test is to evaluate the system’s compliance with the business requirements and assess whether it is acceptable for delivery.

ANALOGY

During the process of manufacturing a ballpoint pen, the cap, the body, the tail and clip, the ink cartridge and the ballpoint are produced separately and unit tested separately. When two or more units are ready, they are assembled and Integration Testing is performed. When the complete pen is integrated, System Testing is performed. Once the System Testing is complete, Acceptance Testing is performed so as to confirm that the ballpoint pen is ready to be made available to the end-users.

METHOD

Usually, the Black Box Testing method is used in Acceptance Testing.

Testing does not usually follow a strict procedure and is not scripted but is rather ad-hoc.

TASKS

Acceptance Test Plan: Prepare, Review, Rework, Baseline

Acceptance Test Cases/Checklist: Prepare, Review, Rework, Baseline

Acceptance Test: Perform

When is it performed?

Acceptance Testing is performed after System Testing and before making the system available for actual use.

Who performs it?

Internal Acceptance Testing (Also known as Alpha Testing) is performed by members of the organization that developed the software but who are not directly involved in the project (Development or Testing). Usually, it is the members of Product Management, Sales and/or Customer Support.

External Acceptance Testing is performed by people who are not employees of the organization that developed the software.

Customer Acceptance Testing is performed by the customers of the organization that developed the software. They are the ones who asked the organization to develop the software for them. [This is in the case of the software not being owned by the organization that developed it.]

User Acceptance Testing (Also known as Beta Testing) is performed by the end users of the software. They can be the customers themselves or the customers’ customers.

Definition by ISTQB

acceptance testing: Formal testing with respect to user needs, requirements, and business processes conducted to determine whether or not a system satisfies the acceptance criteria and to enable the user, customers or other authorized entity to determine whether or not to accept the system.


System Testing

DEFINITION

System Testing is a level of the software testing process where a complete, integrated system/software is tested.

The purpose of this test is to evaluate the system’s compliance with the specified requirements.

ANALOGY

During the process of manufacturing a ballpoint pen, the cap, the body, the tail, the ink cartridge and the ballpoint are produced separately and unit tested separately. When two or more units are ready, they are assembled and Integration Testing is performed. When the complete pen is integrated, System Testing is performed.

METHOD

Usually, the Black Box Testing method is used.

TASKS

System Test Plan: Prepare, Review, Rework, Baseline

System Test Cases: Prepare, Review, Rework, Baseline

System Test: Perform

When is it performed?

System Testing is performed after Integration Testing and before Acceptance Testing.

Who performs it?

Normally, independent Testers perform System Testing.

Definition by ISTQB

system testing: The process of testing an integrated system to verify that it meets specified requirements.


Integration Testing

DEFINITION

Integration Testing is a level of the software testing process where individual units are combined and tested as a group.

The purpose of this level of testing is to expose faults in the interaction between integrated units.

Test drivers and test stubs are used to assist in Integration Testing.

Note: The definition of a unit is debatable and it could mean any of the following:

1. the smallest testable part of software

2. a ‘module’, which could consist of many of ‘1’

3. a ‘component’, which could consist of many of ‘2’

ANALOGY

During the process of manufacturing a ballpoint pen, the cap, the body, the tail and clip, the ink cartridge and the ballpoint are produced separately and unit tested separately. When two or more units are ready, they are assembled and Integration Testing is performed. For example, whether the cap fits into the body or not.

METHOD

Any of Black Box Testing, White Box Testing, and Gray Box Testing methods can be used. Normally, the method depends on your definition of ‘unit’.

TASKS

Integration Test Plan: Prepare, Review, Rework, Baseline

Integration Test Cases/Scripts: Prepare, Review, Rework, Baseline

Integration Test: Perform

When is Integration Testing performed?

Integration Testing is performed after Unit Testing and before System Testing.

Who performs Integration Testing?

Either Developers themselves or independent Testers perform Integration Testing.

APPROACHES

Big Bang is an approach to Integration Testing where all or most of the units are combined and tested in one go. This approach is taken when the testing team receives the entire software in a bundle. So what is the difference between Big Bang Integration Testing and System Testing? Well, the former tests only the interactions between the units, while the latter tests the entire system.

Top Down is an approach to Integration Testing where top-level units are tested first and lower-level units are tested step by step after that. This approach is taken when a top-down development approach is followed. Test Stubs are needed to simulate lower-level units which may not be available during the initial phases.

Bottom Up is an approach to Integration Testing where bottom-level units are tested first and upper-level units are tested step by step after that. This approach is taken when a bottom-up development approach is followed. Test Drivers are needed to simulate higher-level units which may not be available during the initial phases.

Sandwich/Hybrid is an approach to Integration Testing which is a combination of Top Down and Bottom Up approaches.
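The difference between a test stub and a test driver is easier to see in code. The following is a minimal sketch in Python under assumed names: `OrderService`, `PaymentGatewayStub`, `tax_for` and `driver_for_tax` are hypothetical units invented for the illustration, and the 10% tax rate is arbitrary. Amounts are in whole cents to keep the arithmetic exact.

```python
class PaymentGatewayStub:
    """Test stub: stands in for a lower-level unit that is not available yet."""

    def charge(self, amount_cents: int) -> bool:
        # Canned answer instead of real payment logic.
        return amount_cents > 0


class OrderService:
    """Higher-level unit under test; it calls down into a payment gateway."""

    def __init__(self, gateway) -> None:
        self.gateway = gateway

    def place_order(self, amount_cents: int) -> str:
        return "confirmed" if self.gateway.charge(amount_cents) else "rejected"


def tax_for(amount_cents: int) -> int:
    """Lower-level unit; the 10% rate is an arbitrary value for the example."""
    return amount_cents // 10


def driver_for_tax() -> list:
    """Test driver: plays the role of a missing higher-level caller."""
    return [tax_for(amount) for amount in (0, 5000, 1999)]
```

In a Top Down run, `OrderService(PaymentGatewayStub())` lets the upper unit be exercised before the real gateway exists. In a Bottom Up run, `driver_for_tax()` exercises the lower unit before any real caller exists.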

TIPS

Ensure that you have a proper Detailed Design document in which the interactions between units are clearly defined. In fact, you will not be able to perform Integration Testing without this information.

Ensure that you have a robust Software Configuration Management system in place. Or else, you will have a tough time tracking the right version of each unit, especially if the number of units to be integrated is huge.

Make sure that each unit is first unit tested before you start Integration Testing.

As far as possible, automate your tests, especially when you use the Top Down or Bottom Up approach, since regression testing is important each time you integrate a unit, and manual regression testing can be inefficient.

Definition by ISTQB

integration testing: Testing performed to expose defects in the interfaces and in the interactions between integrated components or systems. See also component integration testing, system integration testing.

component integration testing: Testing performed to expose defects in the interfaces and interaction between integrated components.

system integration testing: Testing the integration of systems and packages; testing interfaces to external organizations (e.g. Electronic Data Interchange, Internet).


Unit Testing

DEFINITION

Unit Testing is a level of the software testing process where individual units/components of a software/system are tested. The purpose is to validate that each unit of the software performs as designed.

A unit is the smallest testable part of software. It usually has one or a few inputs and usually a single output. In procedural programming a unit may be an individual program, function, procedure, etc. In object-oriented programming, the smallest unit is a method, which may belong to a base/super class, abstract class or derived/child class. (Some treat a module of an application as a unit. This is to be discouraged as there will probably be many individual units within that module.)

Unit testing frameworks, drivers, stubs and mock or fake objects are used to assist in unit testing.
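As a concrete illustration of a unit with a few inputs and a single output, here is a minimal sketch using Python's standard `unittest` framework. The function `apply_discount` is a hypothetical unit invented for the example, and prices are in whole cents.

```python
import unittest


def apply_discount(price_cents: int, percent: int) -> int:
    """Unit under test: return the price after a percentage discount."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return price_cents - (price_cents * percent) // 100


class ApplyDiscountTest(unittest.TestCase):
    def test_typical_discount(self):
        self.assertEqual(apply_discount(2000, 25), 1500)

    def test_zero_discount_leaves_price_unchanged(self):
        self.assertEqual(apply_discount(2000, 0), 2000)

    def test_invalid_percent_is_rejected(self):
        with self.assertRaises(ValueError):
            apply_discount(2000, 150)
```

Saved in a file, these tests can be run with `python -m unittest`. Each test checks one behavior of the unit without involving a GUI or any other part of the system.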

METHOD

Unit Testing is performed by using the White Box Testing method.

When is it performed?

Unit Testing is the first level of testing and is performed prior to Integration Testing.

Who performs it?

Unit Testing is normally performed by software developers themselves or their peers. In rare cases it may also be performed by independent software testers.

TASKS

Unit Test Plan: Prepare, Review, Rework, Baseline

Unit Test Cases/Scripts: Prepare, Review, Rework, Baseline

Unit Test: Perform

BENEFITS

Unit testing increases confidence in changing and maintaining code. If good unit tests are written and run every time any code is changed, defects introduced by the change are very likely to be caught promptly. If unit testing is not in place, the most one can do is hope for the best and wait until the results of higher-level testing are out. Also, if code has already been made less interdependent to make unit testing possible, the unintended impact of any change is smaller.

Code is more reusable. To make unit testing possible, code needs to be modular, and modular code is easier to reuse.

Development is faster. How? If you do not have unit testing in place, you write your code and perform that fuzzy ‘developer test’ (you set some breakpoints, fire up the GUI, provide a few inputs that hopefully hit your code, and hope that you are all set). If you have unit testing in place, you write the test, write the code, and run the tests. Writing tests takes time, but that time is compensated by how little time it takes to run them: you need not fire up the GUI and provide all those inputs. And, of course, unit tests are more reliable than ‘developer tests’. Development is faster in the long run too. How? The effort required to find and fix defects found during unit testing is peanuts in comparison to that for defects found during system testing or acceptance testing.

The cost of fixing a defect detected during unit testing is lower than that of defects detected at higher levels. Compare the cost (time, effort, destruction, humiliation) of a defect detected during acceptance testing or, say, when the software is live.

Debugging is easy. When a test fails, only the latest changes need to be debugged. With testing at higher levels, changes made over the span of several days, weeks, or months need to be debugged.

Code is more reliable. Why? I think there is no need to explain this to a sane person.

TIPS

Find a tool/framework for your language.

Do not create test cases for ‘everything’: some will be handled by ‘themselves’. Instead, focus on the tests that impact the behavior of the system.

Isolate the development environment from the test environment.

Use test data that is close to that of production.

Before fixing a defect, write a test that exposes the defect. Why? First, you will later be able to catch the defect if you do not fix it properly. Second, your test suite is now more comprehensive. Third, you will most probably be too lazy to write the test after you have already ‘fixed’ the defect.

Write test cases that are independent of each other. For example, if a class depends on a database, do not write a case that interacts with the database to test the class. Instead, create an abstract interface around that database connection and implement that interface with a mock object.

Aim at covering all paths through the unit. Pay particular attention to loop conditions.

Make sure you are using a version control system to keep track of your code as well as your test cases.

In addition to writing cases to verify the behavior, write cases to ensure performance of the code.

Perform unit tests continuously and frequently.
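The tip about wrapping a database behind an abstract interface can be sketched as follows. This is an illustrative Python example under assumed names: `UserStore`, `InMemoryUserStore` and `Greeter` are hypothetical, and the in-memory class is a hand-written mock rather than the output of any mocking library.

```python
from typing import Dict, Optional, Protocol


class UserStore(Protocol):
    """Abstract interface around the database connection."""

    def find_email(self, user_id: int) -> Optional[str]: ...


class InMemoryUserStore:
    """Mock object: implements the interface without touching a real database."""

    def __init__(self, rows: Dict[int, str]) -> None:
        self.rows = rows

    def find_email(self, user_id: int) -> Optional[str]:
        return self.rows.get(user_id)


class Greeter:
    """Class under test; it depends only on the UserStore interface."""

    def __init__(self, store: UserStore) -> None:
        self.store = store

    def greeting(self, user_id: int) -> str:
        email = self.store.find_email(user_id)
        return f"Hello, {email}" if email else "Hello, guest"


def test_known_user() -> None:
    greeter = Greeter(InMemoryUserStore({1: "a@example.com"}))
    assert greeter.greeting(1) == "Hello, a@example.com"


def test_unknown_user() -> None:
    # Each test builds its own store, so the tests stay independent.
    greeter = Greeter(InMemoryUserStore({}))
    assert greeter.greeting(42) == "Hello, guest"
```

Because neither test shares state with the other or with a real database, they can run in any order and give the same result.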

ONE MORE REASON

Let's say you have a program comprising two units. The only test you perform is system testing. [You skip unit and integration testing.] During testing, you find a bug. Now, how will you determine the cause of the problem?

Is the bug due to an error in unit 1?

Is the bug due to an error in unit 2?

Is the bug due to errors in both units?

Is the bug due to an error in the interface between the units?

Is the bug due to an error in the test or test case?

Unit testing is often neglected but it is, in fact, the most important level of testing.

Unit Testing is a level of the software testing process where individual units/components of a software/system are tested. The purpose is to validate that each unit of the software performs as designed.

A unit is the smallest testable part of software. It usually has one or a few inputs and usually a single output. In procedural programming a unit may be an individual program, function, procedure, etc. In object-oriented programming, the smallest unit is a method, which may belong to a base/super class, abstract class or derived/child class. (Some treat a module of an application as a unit. This is to be discouraged as there will probably be many individual units within that module.)

Unit testing frameworks, drivers, stubs and mock or fake objects are used to assist in unit testing.

METHOD

Unit Testing is performed by using the White Box Testing method.

When is it performed?

Unit Testing is the first level of testing and is performed prior to Integration Testing.

Who performs it?

Unit Testing is normally performed by software developers themselves or their peers. In rare cases it may also be performed by independent software testers.

TASKS

Unit Test Plan

Prepare

Review

Rework

Baseline

Unit Test Cases/Scripts

Prepare

Review

Rework

Baseline

Unit Test

Perform

BENEFITS

Unit testing increases confidence in changing/maintaining code. If good unit tests are written and if they are run every time any code is changed, the likelihood of any defects due to the change being promptly caught is very high. If unit testing is not in place, the most one can do is hope for the best and wait till the test results at higher levels of testing are out. Also, if codes are already made less interdependent to make unit testing possible, the unintended impact of changes to any code is less.

Codes are more reusable. In order to make unit testing possible, codes need to be modular. This means that codes are easier to reuse.

Development is faster. How? If you do not have unit testing in place, you write your code and perform that fuzzy ‘developer test’ (You set some breakpoints, fire up the GUI, provide a few inputs that hopefully hit your code and hope that you are all set.) In case you have unit testing in place, you write the test, code and run the tests. Writing tests takes time but the time is compensated by the time it takes to run the tests. The test runs take very less time: You need not fire up the GUI and provide all those inputs. And, of course, unit tests are more reliable than ‘developer tests’. Development is faster in the long run too. How? The effort required to find and fix defects found during unit testing is peanuts in comparison to those found during system testing or acceptance testing.

The cost of fixing a defect detected during unit testing is lesser in comparison to that of defects detected at higher levels. Compare the cost (time, effort, destruction, humiliation) of a defect detected during acceptance testing or say when the software is live.

Debugging is easy. When a test fails, only the latest changes need to be debugged. With testing at higher levels, changes made over the span of several days/weeks/months need to be debugged.

Codes are more reliable. Why? I think there is no need to explain this to a sane person.

TIPS

Find a tool/framework for your language.

Do not create test cases for ‘everything’: some will be handled by ‘themselves’. Instead, focus on the tests that impact the behavior of the system.

Isolate the development environment from the test environment.

Use test data that is close to that of production.

Before fixing a defect, write a test that exposes the defect. Why? First, you will later be able to catch the defect if you do not fix it properly. Second, your test suite is now more comprehensive. Third, you will most probably be too lazy to write the test after you have already ‘fixed’ the defect.

Write test cases that are independent of each other. For example if a class depends on a database, do not write a case that interacts with the database to test the class. Instead, create an abstract interface around that database connection and implement that interface with mock object.

Aim at covering all paths through the unit. Pay particular attention to loop conditions.

Make sure you are using a version control system to keep track of your code as well as your test cases.

In addition to writing cases to verify the behavior, write cases to ensure performance of the code.

Perform unit tests continuously and frequently.
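The tips above on isolating a class from its database and on covering loop paths can be sketched as follows. This is only a minimal illustration: the names OrderStore, InMemoryOrderStore and OrderReport are hypothetical and do not come from any particular framework.

```python
from abc import ABC, abstractmethod


class OrderStore(ABC):
    """Hypothetical abstract interface around the database connection."""

    @abstractmethod
    def amounts_for(self, customer_id: int) -> list[float]:
        """Return the order amounts stored for one customer."""


class InMemoryOrderStore(OrderStore):
    """Mock implementation used only by the tests; no database involved."""

    def __init__(self, rows: dict[int, list[float]]):
        self._rows = rows

    def amounts_for(self, customer_id: int) -> list[float]:
        return list(self._rows.get(customer_id, []))


class OrderReport:
    """The unit under test; it only knows about the interface."""

    def __init__(self, store: OrderStore):
        self._store = store

    def total(self, customer_id: int) -> float:
        total = 0.0
        for amount in self._store.amounts_for(customer_id):
            total += amount
        return total


def test_total_covers_loop_paths():
    store = InMemoryOrderStore({1: [], 2: [5.0], 3: [1.0, 2.0, 3.0]})
    report = OrderReport(store)
    assert report.total(1) == 0.0   # loop body never runs
    assert report.total(2) == 5.0   # loop runs exactly once
    assert report.total(3) == 6.0   # loop runs several times
    assert report.total(99) == 0.0  # unknown customer


test_total_covers_loop_paths()
```

Because the test builds its own in-memory store, it does not depend on any other test or on the state of a real database.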

ONE MORE REASON

Let's say you have a program comprising two units. The only test you perform is system testing. [You skip unit and integration testing.] During testing, you find a bug. Now, how will you determine the cause of the problem?

Is the bug due to an error in unit 1?

Is the bug due to an error in unit 2?

Is the bug due to errors in both units?

Is the bug due to an error in the interface between the units?

Is the bug due to an error in the test or test case?

Unit testing is often neglected but it is, in fact, the most important level of testing.

When should we stop testing?

Common factors in deciding when to stop are:

Deadlines (release deadlines, testing deadlines, etc.)

Test cases completed with a certain percentage passed.

Test budget depleted.

Coverage of code/functionality/requirements reaches a specified point.

Bug rate falls below a certain level.

Beta or alpha testing period ends.

Why we have to start testing early

Introduction:

You have probably heard, and read in blogs, that "testing should start early in the development life cycle". In this chapter, we will discuss very practically why testing should start early.

Fact One

Let’s start with the regular software development life cycle:

First we’ve got a planning phase: needs are expressed, people are contacted, meetings are booked. Then the decision is made: we are going to do this project.

After that, analysis is done, followed by the code build.

Now it’s your turn: you can start testing.

Do you think this is what is going to happen? Dream on.

This is what's going to happen:

Planning, analysis and code build will take more time than planned.

That would not be a problem if the total project time were extended. Forget it; you will most likely have to perform the tests in a few days.

The deadline is not going to be moved at all: promises have been made to customers, and project managers are going to lose their bonuses if they deliver past the deadline.

Fact Two

The earlier you find a bug, the cheaper it is to fix it.

If you are able to find the bug in the requirements determination, it is going to be 50 times cheaper (!!) than when you find the same bug in testing.

It will even be 100 times cheaper (!!) than when you find the bug after going live.

Easy to understand: if you find the bug in the requirements definition, all you have to do is change the text of the requirements. If you find the same bug in final testing, analysis and code build have already taken place. Much more effort has been spent building something that nobody wanted.
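To make the multipliers concrete, here is a small arithmetic sketch. The 50x and 100x factors are the figures quoted above; the base cost of 2 hours is an assumed number chosen only for illustration.

```python
# Relative cost multipliers taken from the figures quoted above.
RELATIVE_COST = {"requirements": 1, "testing": 50, "live": 100}


def fix_cost(phase: str, base_hours: float) -> float:
    """Estimated hours to fix a bug that is found in the given phase."""
    return base_hours * RELATIVE_COST[phase]


# Assumed base cost: 2 hours to correct the requirements text.
for phase in RELATIVE_COST:
    print(f"{phase}: {fix_cost(phase, 2):g} hours")
```

With these assumptions, a 2-hour fix at the requirements stage becomes 100 hours in testing and 200 hours after going live.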

Conclusion: start testing early!

This is what you should do:

Golden software testing rules

Introduction

Read these simple golden rules for software testing. They are based on years of practical testing experience and solid theory.

It's all about finding the bug as early as possible:

Start the software testing process as soon as you get the requirement specification document. Review the specification document carefully and get your queries resolved. This way you can easily find bugs in the requirement document (otherwise the development team might develop the software with the wrong functionality), and it often happens that the requirement document gets changed when the test team raises queries.

After requirement doc review, prepare scenarios and test cases.

Make sure you have at least these 3 software testing levels

1. Integration testing or Unit testing (performed by dev team or separate white box testing team)

2. System testing (performed by professional testers)

3. Acceptance testing (performed by end users, sometimes Business Analyst and test leads assist end users)

Don’t expect too much of automated testing

Automated testing can be extremely useful and can be a real time saver. But it can also turn out to be a very expensive and ineffective solution. Consider the ROI.

Deal with resistance

Don't try to become popular by completing tasks ahead of time at the cost of quality. Some testers do this and get appreciation from managers in early project cycles. But you should stick to quality and perform quality testing. If you have really tested the application fully, then you can back it up with numbers (count of bugs reported, test cases prepared, etc.). Your project partners will definitely appreciate the great job you're doing!

Do regression testing on every new release:

Once the development team is done with the bug fixes and gives a release to the testing team, the testing team should perform regression testing in addition to verifying the bug fixes. In early test cycles, regression testing of the entire application is required. In late testing cycles, when the application is near UAT, discuss the impact of the bug fixes with the dev team and test the functionality accordingly.

Test with real data:

Apart from invalid data entry, testers must test the application with real data. For this, help can be taken from the business analyst and the client.

You can take help from sites like http://www.fakenamegenerator.com/. But when it comes to the finance domain, request sample data from the client, because there can be data like $10.87 Million.

Keep track of change requests

Sometimes, in later test cycles, everyone in the project becomes so busy that there is no time to document the change requests. In this situation, I suggest that testers (test leads) keep track of change requests (which arrive through email communication) in a separate Excel document.

Also give the change requests a priority status:

Show stopper (must have, no workaround)

Major (must have, workaround possible)

Minor (not business critical, but wanted)

Nice to have

Actively use the statuses above for reporting and follow-up!
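If the change requests are tracked in a script rather than a spreadsheet, the four statuses could be modeled roughly like this. The ChangeRequest fields and the numeric ordering are assumptions made for the sketch.

```python
from dataclasses import dataclass
from enum import IntEnum


class Priority(IntEnum):
    """Priority statuses from the list above; lower value = more urgent."""
    SHOW_STOPPER = 1   # must have, no workaround
    MAJOR = 2          # must have, workaround possible
    MINOR = 3          # not business critical, but wanted
    NICE_TO_HAVE = 4


@dataclass
class ChangeRequest:
    title: str          # hypothetical fields, for illustration only
    priority: Priority


def by_priority(requests: list[ChangeRequest]) -> list[ChangeRequest]:
    """Return the change requests with the most urgent first."""
    return sorted(requests, key=lambda cr: cr.priority)
```

Sorting by the enum value gives a ready-made order for status reports and follow-up meetings.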

Note - In CMMI or process-oriented companies, change request management (configuration management) systems are already in place.

Don't be the quality police:

Let the business analyst and technical managers decide which bugs need to be fixed. Testers can certainly give them input on why a fix is required.

'Impact' and 'Chance' are the keys to decide on risk and priority

You should keep a helicopter view of your project. For each part of your application you have to define the 'impact' and the 'chance' of anything going wrong.

'Impact' is what happens if a certain situation occurs.

What’s the impact of an airplane crashing?

'Chance' is the likelihood that something happens.

What’s the chance of an airplane crashing?
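One common way to turn impact and chance into a priority is to rate each on a small scale and multiply them. The 1-to-5 scale, the multiplication rule and the sample application areas below are all assumptions for this sketch, not something prescribed above.

```python
def risk_score(impact: int, chance: int) -> int:
    """Combine impact and chance (each rated 1 to 5) into one score."""
    for name, value in (("impact", impact), ("chance", chance)):
        if not 1 <= value <= 5:
            raise ValueError(f"{name} must be between 1 and 5")
    return impact * chance


# Hypothetical application areas with (impact, chance) ratings.
areas = {"payment": (5, 2), "search": (3, 4), "help pages": (1, 2)}

# Highest risk first: these areas deserve the most testing attention.
ranked = sorted(areas, key=lambda a: risk_score(*areas[a]), reverse=True)
print(ranked)
```

Here "search" ranks above "payment" because a moderate impact combined with a high chance outweighs a severe impact with a low chance.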

Delivery to client:

Once the final testing cycle is completed, or when the application is going for UAT, the test lead should report how many bugs still persist (with priority) and let the technical manager (dev team), product manager and business analyst decide whether the application should be delivered or not. Testers can certainly give them input on the open bugs.

Focus on the software testing process, not on the tools

Test management and other testing tools make our tasks easier, but these tools cannot perform the testing. So instead of focusing on tools, focus on core software testing. You can be very successful using basic tools like MS Excel.

Monday, May 9, 2011

Responsibilities of Test manager/Lead

1. Understand the testing effort by analyzing the requirements of the project.

2. Estimate and obtain management support for the time, resources and budget required to perform the testing.

3. Organize the testing kick-off meeting.

4. Define the strategy.

5. Build a testing team of professionals with appropriate skills, attitudes and motivation.

6. Identify training requirements (technical and soft skills) and forward them to the Project Manager.

7. Develop the test plan for the tasks, dependencies and participants required to mitigate the risks to system quality, and obtain stakeholder support for this plan.

8. Arrange the hardware and software required for the test setup.

9. Assign tasks to all testing team members and ensure that all of them have sufficient work in the project.

10. Ensure the content and structure of all testing documents / artifacts are documented and maintained.

11. Document, implement, monitor, and enforce all processes for testing as per standards defined by the organization.

12. Check / review the test case documents.

13. Keep track of new requirements / changes in requirements of the project.

14. Escalate issues about project requirements (software, hardware, resources) to the Project Manager / Sr. Test Manager.

15. Organize the status meetings and send the status report (daily, weekly etc.) to the client.

16. Attend the regular client call and discuss the weekly status with the client.

17. Communicate with the client (if required).

18. Act as the single point of contact between development and testers.

19. Track and prepare the report of testing activities like test results, test case coverage, required resources, defects discovered and their status, performance baselines etc.

20. Review various reports prepared by test engineers.

21. Ensure the timely delivery of different testing milestones.

22. Prepare / update the metrics dashboard at the end of a phase or at the completion of the project.

Difference between high level and low level test cases?

High level test cases are those which cover major functionality in the application (i.e. retrieve, update, display, cancel (functionality-related test cases), and database test cases).

Low level test cases are those related to the user interface (UI) of the application.

What is Concurrency Testing

Concurrency testing (also commonly known as multi-user testing) is used to learn the effects of different users accessing the application, code module or database at the same time.

It helps in identifying and measuring problems in response time, levels of locking, and deadlocking in the application.

Ex. LoadRunner is widely used for this type of testing; VuGen (Virtual User Generator) is used to set the number of concurrent users and how the users are added, such as a gradual ramp-up, a spike or stepped increases.
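On a much smaller scale than a LoadRunner scenario, the idea of many users hitting the same resource at once can be sketched with threads. The Inventory class and simulate_users helper are hypothetical names made up for this example; it is not a substitute for a real load tool.

```python
import threading


class Inventory:
    """Hypothetical shared resource accessed by many users at once."""

    def __init__(self, stock: int):
        self.stock = stock
        self._lock = threading.Lock()

    def reserve(self) -> bool:
        # The lock makes "check stock, then decrement" one atomic step.
        with self._lock:
            if self.stock > 0:
                self.stock -= 1
                return True
            return False


def simulate_users(inventory: Inventory, users: int) -> int:
    """Let `users` threads try to reserve one item each; count successes."""
    results = []

    def user():
        results.append(inventory.reserve())

    threads = [threading.Thread(target=user) for _ in range(users)]
    for thread in threads:
        thread.start()
    for thread in threads:
        thread.join()
    return sum(results)
```

A concurrency test would then assert that 50 simulated users competing for 10 items produce exactly 10 successful reservations and never a negative stock.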

Entry and Exit criteria in software testing

The entry criteria are the items that must be in place before testing begins, such as:

SRS

FRS

Use cases

Test cases

Test plan

The exit criteria determine whether testing is completed and the application is ready for release, such as:

Test summary

Metrics

Defect analysis report

Bucket testing

Bucket testing (also known as A/B testing) is mostly used to study the impact of different product designs on website metrics. Two versions are run simultaneously on a single web page or a set of web pages to measure the difference in click rates, interface and traffic.
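A minimal sketch of the mechanics follows: each visitor is assigned to one of the two versions, and the click rate is compared per version. Hash-based assignment and the function names here are assumptions for illustration; real A/B tools may split traffic differently.

```python
import hashlib


def bucket_for(user_id: str, experiment: str = "homepage") -> str:
    """Deterministically assign a user to version 'A' or 'B'."""
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode()).hexdigest()
    return "A" if int(digest, 16) % 2 == 0 else "B"


def click_rate(clicks: int, views: int) -> float:
    """Clicks divided by page views; 0.0 when there are no views."""
    return clicks / views if views else 0.0
```

Hashing the user ID means the same visitor always sees the same version, which keeps the measured click rates for A and B comparable.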

Different Types of Severity

User interface defects - Low

Boundary related defects - Medium

Error handling defects - Medium

Calculation defects - High

Interpreting Data defects - High

Hardware Failure and Problems - High

Compatibility and intersystem defects - High

Control flow defects - High

Load conditions (memory leakages under load testing) - High

Tuesday, April 19, 2011

Complete guide on testing web application

Before starting to test a web application, we should have in-depth knowledge of its key elements and the appropriate approach. While doing web-based testing, we have to concentrate on the types of testing below.

Let's first go through the web testing checklist.

1) Functionality Testing

2) Usability testing

3) Interface testing

4) Compatibility testing

5) Performance testing

6) Security testing

1) Functionality Testing:

Test all the links in web pages, the database connection, the forms used in the web pages for submitting or getting information from the user, and cookies.

Check all the links:

Test the outgoing links from all the pages of the specific domain under test.

Test all internal links.

Test links that jump within the same page.

Test links used to send email to the admin or other users from web pages.

Test to check if there are any orphan pages.

Lastly, check for broken links among all the links mentioned above.
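As a starting point for automating the link checks above, the links on a page can be collected and grouped by kind using only the standard library. This sketch does not request any URLs, so finding broken links would still need an HTTP client on top; the names LinkCollector, classify and links_by_kind are made up for the example.

```python
from html.parser import HTMLParser
from urllib.parse import urlparse


class LinkCollector(HTMLParser):
    """Collect the href of every <a> tag in a page."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.append(href)


def classify(href: str, site_host: str) -> str:
    """Label a link as mailto, anchor, internal, or outgoing."""
    if href.startswith("mailto:"):
        return "mailto"
    if href.startswith("#"):
        return "anchor"       # jumps within the same page
    host = urlparse(href).netloc
    return "internal" if host in ("", site_host) else "outgoing"


def links_by_kind(html: str, site_host: str) -> dict[str, list[str]]:
    """Group every link found in `html` by its kind."""
    collector = LinkCollector()
    collector.feed(html)
    grouped: dict[str, list[str]] = {}
    for href in collector.links:
        grouped.setdefault(classify(href, site_host), []).append(href)
    return grouped
```

Each group can then be handed to the matching check: outgoing and internal links to a broken-link check, anchors to a same-page target check, and mailto links to an address check.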

Test forms in all pages:

Forms are an integral part of any web site. Forms are used to get information from users and to interact with them. So what should be checked on these forms?

First, check all the validations on each field.

Check the default values of fields.

Check wrong inputs to the fields in the forms.

Check the options to create forms, if any, and to delete, view or modify the forms.

Let’s take the example of the search engine project I am currently working on. In this project we have advertiser and affiliate sign-up steps. Each sign-up step is different but dependent on the other steps, so the sign-up flow should get executed correctly. There are different field validations, such as email IDs and user financial info validations. All these validations should get checked in manual or automated web testing.
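An automated check of field validations might look like the sketch below. The validate_signup function, its field names and the deliberately simple email pattern are assumptions for illustration; the real rules would come from the project's requirements.

```python
import re

# Deliberately simple pattern: something@something.something, no spaces.
EMAIL_PATTERN = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")


def validate_signup(form: dict[str, str]) -> dict[str, str]:
    """Return a mapping of field name to error message; empty if valid."""
    errors = {}
    email = form.get("email", "").strip()
    if not email:
        errors["email"] = "Email is required."
    elif not EMAIL_PATTERN.match(email):
        errors["email"] = "Email is not valid."
    if not form.get("name", "").strip():
        errors["name"] = "Name is required."
    return errors


# Valid input, wrong input, and missing input each get their own case.
assert validate_signup({"email": "a@b.com", "name": "Ann"}) == {}
assert "email" in validate_signup({"email": "not-an-email", "name": "Ann"})
assert set(validate_signup({})) == {"email", "name"}
```

The three assertions mirror the checklist: valid values pass, wrong inputs are rejected, and empty fields are reported.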

Cookies testing:

Cookies are small files stored on the user's machine. They are basically used to maintain sessions, mainly login sessions. Test the application by enabling or disabling cookies in your browser options. Test if the cookies are encrypted before being written to the user's machine. If you are testing session cookies (i.e. cookies that expire after the session ends), check login sessions and user stats after the session ends. Check the effect on application security of deleting the cookies. (I will soon write a separate article on cookie testing.)
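One of these checks, whether a cookie is a session cookie, can be automated by inspecting the Set-Cookie header. The sketch below uses Python's standard http.cookies module and treats a cookie with neither Expires nor Max-Age as a session cookie; the helper name is_session_cookie is made up for the example.

```python
from http.cookies import SimpleCookie


def is_session_cookie(set_cookie_header: str, name: str) -> bool:
    """True if the named cookie has neither Expires nor Max-Age set."""
    jar = SimpleCookie()
    jar.load(set_cookie_header)
    morsel = jar[name]
    # Unset attributes are returned as empty strings.
    return not morsel["expires"] and not morsel["max-age"]
```

For example, the header value "sid=abc123; Path=/" describes a session cookie, while "sid=abc123; Max-Age=3600; Path=/" describes a cookie that persists for an hour.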

Validate your HTML/CSS:

If you are optimizing your site for search engines, then HTML/CSS validation is very important. Mainly validate the site for HTML syntax errors. Check if the site is crawlable by different search engines.

Database testing:

Data consistency is very important in a web application. Check for data integrity and errors while you edit, delete or modify the forms, or do any DB-related functionality.

Check if all the database queries are executing correctly, and that data is retrieved and updated correctly. Database testing can also cover load on the DB; we will address this in web load or performance testing below.

2) Usability Testing:

Test for navigation:

Navigation means how the user surfs the web pages, uses different controls like buttons and boxes, and uses the links on the pages to reach different pages.

Usability testing includes:

The web site should be easy to use. Instructions should be provided clearly. Check that the provided instructions are correct, meaning that they satisfy their purpose.

A main menu should be provided on each page. It should be consistent.

Content checking:

Content should be logical and easy to understand. Check for spelling errors. Dark colors annoy users and should not be used in the site theme. You can follow the commonly accepted standards for web page and content building, such as those covering annoying colors, fonts, frames etc.

Content should be meaningful. All the anchor text links should work properly. Images should be placed properly with proper sizes.

These are some basic standards that should be followed in web development. Your task is to validate all of them in UI testing.

Other user information for user help:

This includes the search option, site map, help files etc. A site map should be present with all the links in the web site and a proper tree view of navigation. Check all the links on the site map.

A “Search in the site” option will help users find the content pages they are looking for easily and quickly. These are all optional items and, if present, should be validated.

3) Interface Testing:

The main interfaces are:

Web server and application server interface

Application server and Database server interface.

Check if all the interactions between these servers are executed properly and errors are handled properly. If the database or web server returns an error message for a query by the application server, then the application server should catch it and display the error message appropriately to users. Check what happens if the user interrupts a transaction midway. Check what happens if the connection to the web server is reset midway.

4) Compatibility Testing:

Compatibility of your web site is a very important testing aspect. The compatibility tests to be executed are:

Browser compatibility

Operating system compatibility

Mobile browsing

Printing options

Browser compatibility:

In my web-testing career I have experienced this as the most influential part of web site testing.

Some applications are very dependent on browsers. Different browsers have different configurations and settings that your web page should be compatible with. Your web site code should be cross-browser compatible. If you are using JavaScript or AJAX calls for UI functionality, security checks or validations, then put more stress on browser compatibility testing of your web application.

Test the web application on different browsers like Internet Explorer, Firefox, Netscape Navigator, AOL, Safari and Opera, with different versions.

OS compatibility:

Some functionality in your web application may not be compatible with all operating systems. New technologies used in web development, like graphics designs and interface calls such as different APIs, may not be available in all operating systems.

Test your web application on different operating systems like Windows, Unix, Mac, Linux and Solaris, with different OS flavors.

Mobile browsing:

This is the new technology age, so in the future mobile browsing will rock. Test your web pages on mobile browsers. There may be compatibility issues on mobile.

Printing options:

If you are giving page-printing options, then make sure fonts, page alignment and page graphics get printed properly. Pages should fit the paper size or the size mentioned in the printing option.

5) Performance testing:

A web application should sustain heavy load. Web performance testing should include:

Web Load Testing

Web Stress Testing

Test application performance on different Internet connection speeds.

In web load testing, test what happens when many users are accessing or requesting the same page. Can the system sustain peak load times? The site should handle many simultaneous user requests, large input data from users, simultaneous connections to the DB, heavy load on specific pages etc.

Stress testing: Generally, stress means stretching the system beyond its specification limits. Web stress testing is performed to break the site by applying stress, and to check how the system reacts to stress and how it recovers from crashes.

Stress is generally applied to input fields, login and sign-up areas.

In web performance testing, web site functionality on different operating systems and hardware platforms is checked for software and hardware memory leakage errors.

6) Security Testing:

Following are some test cases for web security testing:

Test by pasting an internal URL directly into the browser address bar without logging in. Internal pages should not open.

If you are logged in using a user name and password and browsing internal pages, then try changing URL options directly. E.g. if you are checking some publisher site statistics with publisher site ID = 123, try directly changing the URL site ID parameter to a different site ID which is not related to the logged-in user. Access should be denied for this user to view others' stats.

Try some invalid inputs in input fields like login user name, password and input text boxes. Check the system reaction to all invalid inputs.

Web directories or files should not be accessible directly unless a download option is given.

Test the CAPTCHA against automated script logins.

Test if SSL is used for security measures. If used, a proper message should get displayed when the user switches from non-secure http:// pages to secure https:// pages and vice versa.

All transactions, error messages and security breach attempts should get logged in log files somewhere on the web server.
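The first two test cases (no access without login, and no access to another user's site ID) describe a rule that can be expressed and tested in isolation. The sketch below is a simplified model of such a rule; the OWNED_SITES data, the site_id parameter name and the can_view_stats function are all hypothetical.

```python
from typing import Optional
from urllib.parse import parse_qs, urlparse

# Hypothetical ownership data: which site IDs each user may view.
OWNED_SITES = {"alice": {123}, "bob": {456}}


def can_view_stats(user: Optional[str], url: str) -> bool:
    """Allow access only to logged-in users viewing their own site ID."""
    if user is None:              # not logged in
        return False
    query = parse_qs(urlparse(url).query)
    try:
        site_id = int(query["site_id"][0])
    except (KeyError, ValueError):
        return False              # missing or non-numeric parameter
    return site_id in OWNED_SITES.get(user, set())


base = "http://example.com/stats?site_id="
assert can_view_stats("alice", base + "123")       # own site
assert not can_view_stats("alice", base + "456")   # someone else's site
assert not can_view_stats(None, base + "123")      # no login
assert not can_view_stats("alice", base + "abc")   # invalid input
```

The same four cases can be repeated manually in the browser against the real application by editing the URL in the address bar.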

Let’s have first web testing checklist.

1) Functionality Testing

2) Usability testing

3) Interface testing

4) Compatibility testing

5) Performance testing

6) Security testing

1) Functionality Testing:

Test for – all the links in web pages, database connection, forms used in the web pages for submitting or getting information from user, Cookie testing.

Check all the links:

Test the outgoing links from all the pages from specific domain under test.

Test all internal links.

Test links jumping on the same pages.

Test links used to send the email to admin or other users from web pages.

Test to check if there are any orphan pages.

Lastly in link checking, check for broken links in all above-mentioned links.

Test forms in all pages:

Forms are the integral part of any web site. Forms are used to get information from users and to keep interaction with them. So what should be checked on these forms?

First check all the validations on each field.

Check for the default values of fields.

Wrong inputs to the fields in the forms.

Options to create forms if any, form delete, view or modify the forms.

Let’s take example of the search engine project currently I am working on, In this project we have advertiser and affiliate sign up steps. Each sign up step is different but dependent on other steps. So sign up flow should get executed correctly. There are different field validations like email Ids, User financial info validations. All these validations should get checked in manual or automated web testing.

Cookies testing:

Cookies are small files stored on user machine. These are basically used to maintain the session mainly login sessions. Test the application by enabling or disabling the cookies in your browser options. Test if the cookies are encrypted before writing to user machine. If you are testing the session cookies (i.e. cookies expire after the sessions ends) check for login sessions and user stats after session end. Check effect on application security by deleting the cookies. (I will soon write separate article on cookie testing)

Validate your HTML/CSS:

If you are optimizing your site for Search engines then HTML/CSS validation is very important. Mainly validate the site for HTML syntax errors. Check if site is craw lable to different search engines.

Database testing:

Data consistency is very important in web application. Check for data integrity and errors while you edit, delete, modify the forms or do any DB related functionality.

Check if all the database queries are executing correctly, data is retrieved correctly and also updated correctly. More on database testing could be load on DB, we will address this in web load or performance testing below.

2) Usability Testing:

Test for navigation:

Navigation means how the user surfs the web pages, different controls like buttons, boxes or how user using the links on the pages to surf different pages.

Usability testing includes:

The web site should be easy to use. Instructions should be provided clearly. Check whether the provided instructions are correct, meaning whether they serve their purpose.

The main menu should be provided on each page. It should be consistent.

Content checking:

Content should be logical and easy to understand. Check for spelling errors. Dark colors annoy users and should not be used in the site theme. You can follow the commonly accepted standards for web page and content building, such as the ones I mentioned above about annoying colors, fonts, frames, etc.

Content should be meaningful. All the anchor text links should be working properly. Images should be placed properly with proper sizes.

These are some basic standards that should be followed in web development. Your task is to validate all of them during UI testing.

Other user information for user help:

This covers items like the search option, site map, help files, etc. The site map should be present with all the links in the web site, in a proper tree view of the navigation. Check all the links on the site map.
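Checking every link on a site map by hand gets tedious, so here is a minimal sketch of the idea using Python's standard `html.parser`. The sample HTML, the set of known pages, and the names `LinkCollector` and `find_broken_links` are assumptions for illustration. A real check would request each link from the site instead of comparing against a fixed set.

```python
from html.parser import HTMLParser


class LinkCollector(HTMLParser):
    """Collect the href value of every <a> tag fed to the parser."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


def find_broken_links(sitemap_html: str, known_pages: set) -> list:
    """Return the site map links that do not point to a known page."""
    collector = LinkCollector()
    collector.feed(sitemap_html)
    return [link for link in collector.links if link not in known_pages]


# Hypothetical site map fragment and list of pages that really exist.
SITEMAP_HTML = (
    '<ul>'
    '<li><a href="/home">Home</a></li>'
    '<li><a href="/help">Help</a></li>'
    '<li><a href="/old-page">Old page</a></li>'
    '</ul>'
)
KNOWN_PAGES = {"/home", "/help", "/search"}

broken = find_broken_links(SITEMAP_HTML, KNOWN_PAGES)
print("broken links:", broken)
```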

“Search in the site” option will help users to find content pages they are looking for easily and quickly. These are all optional items and if present should be validated.

3) Interface Testing:

The main interfaces are:

Web server and application server interface

Application server and Database server interface.

Check whether all the interactions between these servers are executed properly and whether errors are handled properly. If the database or web server returns an error message for any query from the application server, then the application server should catch the error and display an appropriate message to users. Check what happens if the user interrupts a transaction in between. Check what happens if the connection to the web server is reset in between.
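To make the error-handling point concrete, here is a small sketch of an application-side function that catches a database error and returns a message fit for users. It uses SQLite from the standard library as a stand-in for the database server; the `stats` table, the function name `fetch_stats`, and the message wording are all assumptions.

```python
import sqlite3


def fetch_stats(conn: sqlite3.Connection, site_id: int) -> dict:
    """Return click stats, or a user-facing message if the query fails."""
    try:
        row = conn.execute(
            "SELECT clicks FROM stats WHERE site_id = ?", (site_id,)
        ).fetchone()
    except sqlite3.Error:
        # Catch the raw database error; show the user a clean message instead.
        return {"ok": False,
                "message": "Statistics are temporarily unavailable. Please try again later."}
    if row is None:
        return {"ok": False, "message": "No statistics found for this site."}
    return {"ok": True, "clicks": row[0]}


conn = sqlite3.connect(":memory:")

# The table does not exist yet, so the database returns an error.
error_result = fetch_stats(conn, 123)

# After the table is created and filled, the same call succeeds.
conn.execute("CREATE TABLE stats (site_id INTEGER PRIMARY KEY, clicks INTEGER)")
conn.execute("INSERT INTO stats (site_id, clicks) VALUES (123, 42)")
good_result = fetch_stats(conn, 123)
missing_result = fetch_stats(conn, 999)

print(error_result, good_result, missing_result)
```

The test to write against the real application is the same: force the error, then confirm the user sees a clean message and not the raw database text.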

4) Compatibility Testing:

Compatibility of your web site is a very important testing aspect. The compatibility tests to execute are:

Browser compatibility

Operating system compatibility

Mobile browsing

Printing options

Browser compatibility:

In my web-testing career I have found this to be the most influential part of web site testing.

Some applications are very dependent on browsers. Different browsers have different configurations and settings that your web page should be compatible with. Your web site code should be cross-browser compatible. If you are using JavaScript or AJAX calls for UI functionality, security checks, or validations, then put more stress on browser compatibility testing of your web application.

Test the web application on different browsers like Internet Explorer, Firefox, Netscape Navigator, AOL, Safari, and Opera, with different versions.

OS compatibility:

Some functionality in your web application may not be compatible with all operating systems. New technologies used in web development, such as graphics designs and interface calls to different APIs, may not be available in all operating systems.

Test your web application on different operating systems like Windows, Unix, Mac, Linux, and Solaris, with different OS flavors.

Mobile browsing:

This is the new technology age, so in future mobile browsing will rock. Test your web pages on mobile browsers. There may be compatibility issues on mobile.

Printing options:

If you are giving page-printing options then make sure fonts, page alignment, and page graphics are printed properly. Pages should fit the paper size or the size mentioned in the printing option.

5) Performance testing:

A web application should sustain heavy load. Web performance testing should include:

Web Load Testing

Web Stress Testing

Test application performance at different Internet connection speeds.

In web load testing, test what happens when many users access or request the same page. Can the system sustain peak load times? The site should handle many simultaneous user requests, large input data from users, simultaneous connections to the DB, heavy load on specific pages, etc.

Stress testing: Generally, stress means stretching the system beyond its specification limits. Web stress testing is performed to break the site by applying stress, and to check how the system reacts to stress and how it recovers from crashes.

Stress is generally applied to input fields and to login and sign-up areas.

In web performance testing, web site functionality on different operating systems and different hardware platforms is checked for software and hardware memory leakage errors.
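The basic shape of a load test can be sketched in a few lines of Python. Note the big assumption: `fake_page_request` is a stand-in that only sleeps and returns a status code, so this shows the structure of the test and not real site behaviour. In practice you would replace it with a real HTTP call or use a dedicated load testing tool.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def fake_page_request(user_id: int) -> int:
    """Stand-in for one user requesting the page; returns a status code."""
    time.sleep(0.01)  # pretend the server takes 10 ms to respond
    return 200


def run_load(n_users: int, workers: int) -> dict:
    """Fire n_users requests with up to `workers` running at the same time."""
    start = time.perf_counter()
    with ThreadPoolExecutor(max_workers=workers) as pool:
        statuses = list(pool.map(fake_page_request, range(n_users)))
    elapsed = time.perf_counter() - start
    return {
        "requests": len(statuses),
        "ok": statuses.count(200),
        "seconds": elapsed,
    }


load_result = run_load(n_users=50, workers=10)
print(load_result)
```

Raising `n_users` and `workers` step by step, and watching when `ok` falls below `requests` or `seconds` grows sharply, gives a first picture of behaviour at peak load.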

6) Security Testing:

Following are some test cases for web security testing:

Test by pasting an internal URL directly into the browser address bar without logging in. Internal pages should not open.

If you are logged in with a user name and password and are browsing internal pages, then try changing URL options directly. For example, if you are checking some publisher site statistics with publisher site ID = 123, try directly changing the URL site ID parameter to a different site ID which is not related to the logged-in user. Access should be denied for this user to view others' stats.

Try some invalid inputs in input fields like login user name, password, and input text boxes. Check the system's reaction to all invalid inputs.

Web directories or files should not be accessible directly unless given download option.

Test the CAPTCHA against automated script logins.

Test whether SSL is used for security measures. If it is used, a proper message should be displayed when the user switches from non-secure http:// pages to secure https:// pages and vice versa.

All transactions, error messages, and security breach attempts should be logged in log files somewhere on the web server.
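The site ID test case above can be sketched as a small script. Everything here is hypothetical: the `site_id` query parameter name, the example URLs, the `OWNED_SITES` mapping, and `is_access_allowed`, which only stands in for the check the real application should perform on the server.

```python
from urllib.parse import parse_qs, urlparse

# Hypothetical ownership data: which site IDs belong to which logged-in user.
OWNED_SITES = {"alice": {123}, "bob": {456}}


def requested_site_id(url: str) -> int:
    """Read the site ID parameter out of the URL query string."""
    query = parse_qs(urlparse(url).query)
    return int(query["site_id"][0])


def is_access_allowed(user: str, url: str) -> bool:
    """Stand-in for the server-side check: allow only the user's own sites."""
    return requested_site_id(url) in OWNED_SITES.get(user, set())


# The user's own stats page, then the same URL with the ID changed by hand.
own_url = "https://example.com/stats?site_id=123"
tampered_url = "https://example.com/stats?site_id=456"

own_allowed = is_access_allowed("alice", own_url)
tampered_allowed = is_access_allowed("alice", tampered_url)
print("own page allowed:", own_allowed)
print("tampered page allowed:", tampered_allowed)
```

Against a real site, the tester sends both URLs while logged in as the same user and expects the second request to be refused.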

Tuesday, April 12, 2011

Task-Based Software Testing

Once, at a software testing conference held in Bangalore, India, the topic of discussion was "How to test software’s impact on a system’s mission effectiveness?"

Mostly, customers want systems that are:

- On-time

- Within budget

- That satisfy user requirements

- Reliable

The latter two concerns (out of the above four) can be refined into two broad objectives for operational testing:

1. That a system’s performance satisfies its requirements as specified in the Operational Requirement Document and related documents.

2. To identify any serious deficiencies in the system design that need correction before full rate production.

Following the path from the system level to the software, there are two generic reasons for testing software:

- Test for defects so they can be fixed - Debug Testing

- Test for confidence in the software - Operational Testing

Debug testing is usually conducted using a combination of functional test techniques and structural test techniques. The goal is to locate defects in the most cost-effective manner and correct the defects, ensuring the performance satisfies the user requirements.

Operational testing is based on the expected usage profile for a system. The goal is to estimate the confidence in a system, ensuring the system is reliable for its intended use.

Task-Based Testing is a variation on operational testing. The particular techniques are not new; rather, it leverages commonly accepted techniques by placing them within the context of current operational and acquisition strategies.

Task-based testing, as the name implies, uses task analysis. This begins with a comprehensive framework for all of the tasks that the system will perform. Through a series of hierarchical task analyses, each unit within the service creates a Mission Essential Task List (Mission of System).

These lists only describe "what" needs to be done, not "how" or "who." Further task decomposition identifies the system and people required to carry out a mission essential task. Another level of decomposition results in the system tasks (i.e. functions) a system must provide. This is, naturally, the level in which developers and testers are most interested. From a tester’s perspective, this framework identifies the most important functions to test by correlating functions against the mission essential tasks a system is designed to support.

This is distinctly different from the typical functional testing or "test-to-spec" approach where each function or specification carries equal importance. Ideally, there should be no function or specification which does not contribute to a task, but in reality there are often requirements, specifications, and capabilities which do not or minimally support a mission essential task. Using task analysis, one identifies those functions impacting the successful completion of mission essential tasks and highlights them for testing.

Operational Profiles: The process of task analysis has great benefit in identifying which functions are the most important to test. However, the task analysis only identifies the mission essential tasks and functions, not their frequency of use. Greater utility can be gained by combining the mission essential tasks with an operational profile, which is an estimate of the relative frequency of inputs that represent field use. This has several benefits:

1. Offers a basis for reliability assessment, so that the developer has not only the assurance of having tried to improve the software, but also an estimate of the reliability actually achieved.

2. Provides a common base for communicating with the developers about the intended use of the system and how it will be evaluated.

3. When software testing schedules and budgets are tightly constrained, this design yields the highest practical reliability because if failures are seen they would be the high frequency failures.

The first benefit has the advantage of applying statistical techniques in:

- The design of tests

- The analysis of resulting data

Software reliability estimation methods are available to estimate both the expected field reliability and the rate of growth in reliability. This directly supports an answer to the question about software’s impact on a system’s mission effectiveness.

Operational profiles are criticized as being difficult to develop. However, as part of their current operations and acquisition strategy, some organizations inherently develop an operational profile. At higher levels, this is reflected in the following documents:

- Analysis of Alternatives

- Operational Requirements Document (ORD)

- Operations Plans

- Concept of Operations (CONOPS) etc.

Closer to the tester’s realm is the interaction between the user and the developer, which the current acquisition strategy encourages. The tester can act as a facilitator in helping the user refine his or her needs while providing insight to the developer on expected use. This highlights the second benefit above: the communication between the user, developer, and tester.

Despite years of improvement in the software development process, one still sees systems which have gone through intensive debug testing (statement coverage, branch coverage, etc.) and "test-to-spec," but still fail to satisfy the customer’s concerns (that I mentioned above). By involving a customer early in the process to develop an operational profile, the most needed functions to support a task will be developed and tested first, increasing the likelihood of satisfying the customer’s four concerns. This third benefit is certainly of interest in today’s environment of shrinking budgets and manpower, shorter schedules (spiral acquisition), and greater demands on a system.

Task-Based Software Testing

Thus, Task-based software testing is the combination of a task analysis and an operational profile. The task analysis helps partition the input domain into mission essential tasks and the system functions which support them. Operational profiles, based on these tasks, are developed to further focus the testing effort.
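As a rough sketch of how the two pieces can combine, the Python below filters functions by a mission essential list and then ranks and samples them by an operational profile. The function names, the frequencies, and the helper names `prioritize` and `sample_test_sequence` are invented for illustration; real values would come from the task analysis and from field usage estimates.

```python
import random

# Hypothetical operational profile: estimated relative frequency of use.
OPERATIONAL_PROFILE = {
    "search": 0.60,
    "login": 0.25,
    "export_report": 0.10,
    "change_theme": 0.05,
}

# Hypothetical result of task analysis: functions supporting essential tasks.
MISSION_ESSENTIAL = {"search", "login", "export_report"}


def prioritize(profile: dict, essential: set) -> list:
    """Keep only mission essential functions, most frequently used first."""
    ranked = [name for name in profile if name in essential]
    return sorted(ranked, key=lambda name: profile[name], reverse=True)


def sample_test_sequence(profile: dict, essential: set, n: int, seed: int = 0) -> list:
    """Draw n functions to test, weighted by their frequency of use."""
    rng = random.Random(seed)
    functions = [name for name in profile if name in essential]
    weights = [profile[name] for name in functions]
    return rng.choices(functions, weights=weights, k=n)


test_order = prioritize(OPERATIONAL_PROFILE, MISSION_ESSENTIAL)
test_sequence = sample_test_sequence(OPERATIONAL_PROFILE, MISSION_ESSENTIAL, n=20)
print("test first:", test_order)
print("sampled sequence:", test_sequence)
```

With a tight schedule, the ranked list says what to test first, and the weighted sample mimics field use, so the failures found are the ones users would hit most often.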

Debug Testing